
title: Web Decay Graph

url: https://www.tbray.org/ongoing/When/201x/2015/05/25/URI-decay

hash_url: b8dd4f6d72

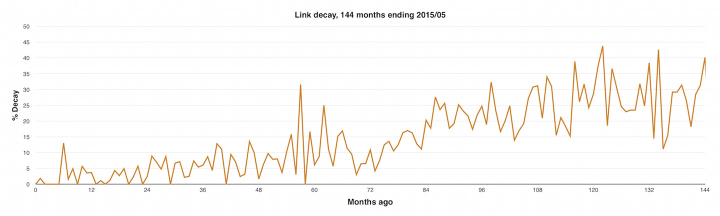

I’ve been writing this blog since 2003 and in that time have laid down, along with way over a million words, 12,373 hyperlinks. I’ve noticed that when something leads me back to an old piece, the links are broken disappointingly often. So I made a little graph of their decay over the last 144 months.

For each value of “Months Ago”, the “% Decay” figure is the percentage of links made in that month that have since decayed. For example, just over 5% of the links I made 60 months before May 2015, i.e. in May 2010, have decayed.
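To make that definition concrete, here is a minimal sketch of the bucketing. It is not the script behind the graph; the `Link` struct, its `made_on` and `alive` fields, and both method names are hypothetical, and it assumes each link has already been checked.

```ruby
require "date"

# Hypothetical record: the date a link was made and whether it still resolves.
Link = Struct.new(:made_on, :alive)

# Whole calendar months between the month a link was made and the reference month.
def months_ago(made_on, reference)
  (reference.year - made_on.year) * 12 + (reference.month - made_on.month)
end

# Returns a hash of { months_ago => percentage of that month's links that are dead }.
def decay_by_month(links, reference)
  links.group_by { |link| months_ago(link.made_on, reference) }
       .transform_values do |group|
         dead = group.count { |link| !link.alive }
         100.0 * dead / group.size
       end
end
```

Calling `decay_by_month(links, Date.new(2015, 5, 1))` would put links made in May 2010 in bucket 60, matching the example above.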

Longer title · “A broad-brush approximation of URI decay focused on links selected for blogging by a Web geek with a camera, computed using a Ruby script cooked up in 45 minutes.” Mind you, the script took the best part of 24 hours to run, because I was too lazy to make it run a hundred or so threads in parallel.
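For the curious, the hundred-or-so-threads version might look roughly like the sketch below. This is an illustration under assumptions, not the original script: `alive?` and `check_all` are hypothetical names, and treating any 2xx or 3xx response to a HEAD request as “alive” is an assumption too (some servers answer HEAD differently from GET).

```ruby
require "net/http"
require "uri"

# Assumption: a link is alive if a HEAD request returns a 2xx or 3xx status.
# Any error (bad URL, DNS failure, timeout, refused connection) counts as dead.
def alive?(url)
  uri = URI.parse(url)
  return false unless uri.is_a?(URI::HTTP) && uri.host # URI::HTTPS subclasses URI::HTTP

  Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https",
                  open_timeout: 10, read_timeout: 10) do |http|
    response = http.head(uri.request_uri)
    response.is_a?(Net::HTTPSuccess) || response.is_a?(Net::HTTPRedirection)
  end
rescue StandardError
  false
end

# Checks URLs with a fixed pool of worker threads pulling from a shared queue.
# An optional block replaces the default checker, which is handy for dry runs.
def check_all(urls, workers: 100, &checker)
  checker ||= method(:alive?)
  queue = Queue.new
  urls.each { |url| queue << url }
  queue.close # pop returns nil once the closed queue is empty

  results = {}
  lock = Mutex.new
  threads = Array.new(workers) do
    Thread.new do
      while (url = queue.pop)
        ok = checker.call(url)
        lock.synchronize { results[url] = ok }
      end
    end
  end
  threads.each(&:join)
  results
end
```

Since the work is almost entirely waiting on the network, plain threads are enough here; the pool size of 100 is just the figure mentioned above, not a tuned value.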

I suppose I could regress the hell out of the data and get a prettier line but the story these numbers are telling is clear enough.
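If someone did want the prettier line, the simplest possible regression is an ordinary least-squares straight line through the (months ago, % decay) points. The sketch below assumes a linear fit is a reasonable choice, which the data may not support, and `linear_fit` is a hypothetical helper name.

```ruby
# Ordinary least-squares fit of y = slope * x + intercept.
# points is an array of [x, y] pairs, e.g. [months_ago, percent_decay],
# and must contain at least two distinct x values.
def linear_fit(points)
  n = points.size.to_f
  mean_x = points.sum { |x, _| x } / n
  mean_y = points.sum { |_, y| y } / n
  sxy = points.sum { |x, y| (x - mean_x) * (y - mean_y) }
  sxx = points.sum { |x, _| (x - mean_x)**2 }
  slope = sxy / sxx
  [slope, mean_y - slope * mean_x]
end
```

The slope would then read as percentage points of decay per month of age.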

Another way to get a smoother curve would be for someone at Google to throw a Map/Reduce at a historical dataset with hundreds of billions of links.

This is a very sad graph · But to be honest I was expecting worse. I wonder if, a hundred years after I’m dead, the only links that remain alive will begin with “en.wikipedia.org”?