
title: A Codebase is an Organism

url: http://www.meltingasphalt.com/a-codebase-is-an-organism/


I.

Here’s what no one tells you when you graduate with a degree in computer science and take up a job in software engineering:

The computer is a machine, but a codebase is an organism.

This will make sense to anyone who’s worked on a large or even medium-sized software project — but it’s often surprising to new grads. Why? Because nothing in your education prepares you for how to deal with an organism.

Computer science is all about how to control the machine: to make it do exactly what you want, during execution, on the time scale of nano- and milliseconds. But when you build real software — especially as part of a team — you have to learn how to control not only the (very obedient) machine, but also a large, sprawling, and often unruly codebase.

This turns out to require a few ‘softer’ skills. Unlike a computer, which always does exactly what it’s told, code can’t really be bossed around. Perhaps this is because code is ultimately managed by people. But whatever the reason, you can’t tell a codebase what to do and expect to be obeyed. Instead, the most you can do (in order to maximize your influence) is to steward the codebase, nurturing it as it grows over a period of months and years.

When you submit a CS homework assignment, it’s done. Fixed. Static. Either your algorithm is correct and efficient or it’s not. But push the same algorithm into a codebase and there’s a very real sense in which you’re releasing it into the wild.

Out there in the codebase, all alone, your code will have to fend for itself. It will be tossed and torn, battered and bruised, by other developers — which will include yourself, of course, at later points in time. Exposed to the elements (bug fixes, library updates, drive-by refactorings), your code will suffer all manner of degradations, “the slings and arrows of outrageous fortune… the thousand natural shocks that flesh is heir to.”

II.

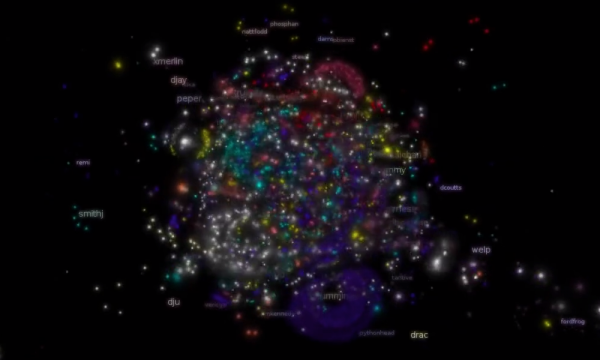

Whether you’re a developer or otherwise, nothing will give you a better feel for code’s organic nature than watching a codeswarm visualization — an animation of the commit history of a codebase.

Here’s a still of the Gentoo Linux codeswarm:

But a static image hardly does this any justice. The whole point is to see the evolution of the thing — in HD if you can. “Swarm” ends up being a pretty accurate description: it’s like watching a flock of developers attacking a hive of text files. Well, “attacking” or “mating with” — I can’t really tell which.

(There’s also a nice rendering of the Eclipse project codeswarm on Vimeo.)

What a codeswarm makes vivid is this: that managing a codebase requires an entirely different mindset from the one needed to control a machine. It requires knowing how to deal with the unpredictable flux of a complex, wild organism.

III.

The organic nature of code manifests itself in the dual forces of growth and decay.

Let’s start with decay. Realizing that code can wither, decay, or even die leads us to the nursing metaphor: the codebase as a sick patient.

Code doesn’t decay on its own, of course. Left completely untouched, it will survive as long as you care to archive it. Decay (often called code rot or software rot) only sets in when changes are made, either to the code itself or to any of its dependencies. And since the code and dependencies of a living project are almost always changing, we can say, as a rule of thumb, that most code is decaying during most of its existence. It’s like entropy. You never ‘win’ against entropy; you just try to last as long as you can.

In a healthy piece of code, entropic decay is typically staved off by dozens of tiny interventions — bug fixes, test fixes, small refactors, migrating off a deprecated API, that sort of thing. These are the standard maintenance operations that all developers undertake on behalf of code that they care about. It’s when the interventions stop happening, or don’t happen often enough, that code rot sets in.

We can assess a module in terms of ‘risk factors’ for this kind of decay. The older a module is, for example, the more likely it is to be suffering from code rot. More important than age, however, is the time since its last major refactor. (Recently refactored code is a lot like new code, for good or ill.) Also, the more dependencies a module has, and the more those dependencies have recently changed, the more likely the module is to have gone bad.
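These risk factors can be turned into a rough triage heuristic. The sketch below is a toy, not a method from this essay: the `ModuleStats` fields, the weights, and the module names are all hypothetical assumptions, picked only so that time since the last major refactor outweighs raw age and dependency churn adds risk. In a real project the inputs might be mined from version-control history. It deliberately leaves out execution under test, the factor the next paragraph argues matters most.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    """Hypothetical per-module facts, e.g. mined from version-control history."""
    name: str
    age_days: int               # time since the module was first written
    days_since_refactor: int    # time since its last major refactor
    dependency_count: int       # how many other modules or libraries it uses
    recently_changed_deps: int  # how many of those dependencies changed recently


# Arbitrary, hypothetical weights. The only intent is that time since the last
# major refactor outweighs raw age, and that dependency churn adds risk.
AGE_WEIGHT = 1.0
REFACTOR_WEIGHT = 3.0
DEPENDENCY_WEIGHT = 0.5
CHURN_WEIGHT = 2.0


def rot_risk(m: ModuleStats) -> float:
    """Unitless score: higher means the module is more likely to have decayed."""
    years_old = m.age_days / 365
    years_since_refactor = m.days_since_refactor / 365
    return (
        AGE_WEIGHT * years_old
        + REFACTOR_WEIGHT * years_since_refactor
        + DEPENDENCY_WEIGHT * m.dependency_count
        + CHURN_WEIGHT * m.recently_changed_deps
    )


if __name__ == "__main__":
    modules = [
        ModuleStats("billing", age_days=2000, days_since_refactor=30,
                    dependency_count=4, recently_changed_deps=0),
        ModuleStats("reports", age_days=900, days_since_refactor=900,
                    dependency_count=12, recently_changed_deps=5),
    ]
    # Review the riskiest modules first.
    for m in sorted(modules, key=rot_risk, reverse=True):
        print(f"{m.name}: {rot_risk(m):.1f}")
```

With these made-up numbers, `reports` (younger, but never refactored and sitting on churning dependencies) sorts ahead of the older but freshly refactored `billing`.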

But all of these risk factors pale in importance next to how much execution a piece of code has been getting. Execution by itself isn’t quite enough, though — it has to be in a context where someone is paying attention to the results. This type of execution is also known as testing.

Testing can take many forms — automated or manual, ad hoc developer testing, and even ‘testing’ through use in production. As long as the code is getting executed in a context where the results matter, it counts. The more regularly this happens, of course, the better.

I find it useful to think of execution as the lifeblood of a piece of code — the vital flow of control? electronic pulse? — and testing as medical instrumentation, like a heart rate monitor. You never know when a piece of code, which is rotting all the time, will atrophy in a way that causes a serious bug. If your code is being tested regularly, you’ll find out soon and will be able to intervene. But without testing, no one will notice that your code has flatlined. Errors will begin to pile up. After a month or two, a module can easily become so rotten that it’s impossible to resuscitate.

Thus teams often confront an uncomfortable choice between a risky refactoring operation and a clean amputation. The best developers can be positively gleeful about amputating a diseased piece of code (even when it’s their own baby, so to speak), recognizing that it’s often the best choice for the overall health of the project. Better a single module should die than continue to bog down the rest of the project.

IV.

Now you might assume that while decay is problematic, growth is always good. But of course it’s not so simple.

Certainly it’s true that a project needs to grow in order to become valuable, so the problem isn’t growth per se, but rather unfettered or opportunistic growth. Haphazard growth. Growth by means of short-sighted, local optimizations. And this kind of growth seems to be the norm, perhaps because developers are often themselves short-sighted and opportunistic, if not outright lazy. But even the best, most conscientious developers fall pitifully short of making globally optimal decisions all the time.

Left to ‘its’ own devices, then, a codebase can quickly devolve into a tangled mess. And the more it grows, the more volume it has to maintain against the forces of entropy. In this way, a project can easily collapse under its own weight.

For these reasons, any engineer worth her salt soon learns to be paranoid about code growth.

She assumes, correctly, that whenever she ceases to be vigilant, the code will get itself into trouble. She knows, for example, that two modules will tend to grow ever more dependent on each other unless separated by hard (‘physical’) boundaries. She’s had to do that surgery — to separate two modules that had become inappropriately entangled with each other. Afraid of such spaghetti code (rat’s nests), she strives relentlessly to arrange her work (and the work of others) into small, encapsulated, decoupled modules.

Faced with the necessity of growth but also its dangers, the seasoned engineer therefore seeks a balance between nurture and discipline. She knows she can’t be too permissive; coddled code won’t learn its boundaries. But she also can’t be too tyrannical. Code needs some freedom to grow at the optimal rate.

In this way, building software isn’t at all like assembling a car. In terms of managing growth, it’s more like raising a child or tending a garden.

V.

Once you develop an intuition for the organic nature of a codebase, a lot of the received wisdom becomes clearer and easier to understand. You realize that many software engineering principles are corollaries or specific applications of the same general idea, namely that:

Successful management of a codebase consists in defending its long-term health against the dangers of decay and opportunistic growth.

It very quickly becomes obvious, for example, that you should test the living daylights out of your code, using as much automation as possible. How else are you to prevent code rot from setting in? How else will you catch bugs as soon as they sprout?
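As a minimal sketch of what automated testing looks like in practice, here is a small suite written with Python’s standard `unittest` module. The function under test, `parse_price`, is hypothetical (it assumes non-negative prices written as dollars with an optional cents part). The point is that once tests like these run on every change, for example in continuous integration, decay in the function shows up as a failing test rather than a silent bug.

```python
import unittest


def parse_price(text: str) -> int:
    """Hypothetical function under test: turn a non-negative price like '12.34' into cents."""
    dollars, _, cents = text.strip().partition(".")
    # Pad a one-digit cents part ('0.5' -> '50') and ignore digits past the second.
    return int(dollars) * 100 + int(cents.ljust(2, "0")[:2])


class ParsePriceTest(unittest.TestCase):
    def test_whole_dollars(self):
        self.assertEqual(parse_price("5"), 500)

    def test_dollars_and_cents(self):
        self.assertEqual(parse_price("12.34"), 1234)

    def test_single_cent_digit(self):
        self.assertEqual(parse_price("0.5"), 50)

    def test_rejects_garbage(self):
        # int() raises ValueError on non-numeric input; the test pins that behavior down.
        with self.assertRaises(ValueError):
            parse_price("twelve")
```

Saved as, say, `test_prices.py`, the suite runs with `python -m unittest test_prices`.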

The organic nature of code also suggests that you should know your code smells. A code smell, according to Wikipedia, is

> any symptom in the source code of a program that possibly indicates a deeper problem. *Code smells are usually not bugs* [emphasis mine] — they are not technically incorrect and don’t currently prevent the program from functioning. Instead, they indicate weaknesses in design that may be slowing down development or increasing the risk of bugs or failures in the future.

“Not a bug” means that a code smell, whatever it is, won’t be causing problems during execution, on the machine — at least not right now. Instead, it’s going to cause problems during evolution of the codebase. It might invite entropy to accumulate somewhere, for example, or put unnecessary constraints on growth, or allow something to grow where it shouldn’t. And like a doctor trying to detect a disease in its early stages, the sooner you catch a whiff of bad code, the fewer problems you’ll have down the line.

The organic, evolutionary nature of code also highlights the importance of getting your APIs right. By virtue of their public visibility, APIs can exert a lot of influence on the future growth of the codebase. A good API acts like a trellis, coaxing the code to grow where you want it. A bad API is like a cancer, and it will metastasize all over your codebase. Some quick examples:

- If you expose a method that doesn’t handle nulls properly, then you force each of your callers to branch on null before calling your API.

- If you design an asynchronous API with callbacks, you’ll force all your callers to write multithreaded code. (Sometimes this is clearly the right decision, but when it’s not, you’re infecting the rest of the codebase with your bad decision.)

- If your API passes around a “grab bag” object, you’re all but inviting fellow devs to use the “spooky action at a distance” anti-pattern.
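The first and third bullets can be sketched in Python, with `None` playing the role of null. All the names here (`find_display_name_leaky`, `display_name`, `apply_discount_grab_bag`, `Order`, `discounted_total`) are hypothetical illustrations. In each pair, the first version pushes work or risk onto every caller; the second keeps it contained behind the API.

```python
from dataclasses import dataclass
from typing import Optional


# Leaky: returns None for an unknown user, so every caller must remember
# to branch on None before using the result.
def find_display_name_leaky(users: dict[str, str], user_id: str) -> Optional[str]:
    return users.get(user_id)


# Contained: the API handles the missing case itself; callers never see None.
def display_name(users: dict[str, str], user_id: str,
                 default: str = "Unknown user") -> str:
    return users.get(user_id, default)


# Grab bag: a mutable dict passed through many functions. Any function can read
# or write any key, so a change here can silently affect code far away.
def apply_discount_grab_bag(ctx: dict) -> None:
    ctx["total_cents"] = ctx["total_cents"] * 90 // 100
    ctx["discounted"] = True  # some distant function may later branch on this key


# Explicit: inputs and output are spelled out, and nothing else is touched.
@dataclass(frozen=True)
class Order:
    subtotal_cents: int


def discounted_total(order: Order, percent_off: int) -> int:
    return order.subtotal_cents * (100 - percent_off) // 100
```

The explicit versions are slightly more verbose, but callers can no longer forget a `None` check or be surprised by a key that some other function quietly added.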

Finally, here’s an idea that took me many years to appreciate on a gut level (but which is completely obvious in hindsight): the benefits of failing fast. What I should have understood is that the choice of whether to fail on unexpected inputs reflects a conflict of interest between the computer and the codebase.

From the perspective of the machine on which the code is executing right now, it’s better not to fail, and instead to hope that everything will be OK: that some other part of the stack will handle the failure gracefully. Maybe we can recover. Maybe the user won’t notice. Why crash when we could at least try to keep going? And everything in my CS education taught me to do what’s right for the machine.

But from the perspective of the codebase — whose success depends not on any single execution, but rather on long-term health — it’s far better to fail fast (and loud), in order to call immediate attention to the problem so it can be fixed.
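A minimal sketch of the two attitudes, assuming a hypothetical configuration dictionary with a `timeout_seconds` key and an arbitrary fallback of 30 seconds. The lenient reader does what’s right for the machine: it keeps going no matter what. The strict reader does what’s right for the codebase: it raises immediately, so a bad config gets noticed and fixed instead of quietly running with a value nobody chose.

```python
import logging


def read_timeout_lenient(config: dict) -> int:
    """Machine-friendly: never crash. Bad or missing values fall back to 30."""
    try:
        return int(config["timeout_seconds"])
    except (KeyError, ValueError, TypeError):
        # The warning is easy to miss in a busy log, so the problem can linger.
        logging.warning("bad or missing timeout_seconds; falling back to 30")
        return 30


def read_timeout_strict(config: dict) -> int:
    """Codebase-friendly: fail fast and loud on unexpected input."""
    if "timeout_seconds" not in config:
        raise KeyError("config is missing required key 'timeout_seconds'")
    value = config["timeout_seconds"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
        raise ValueError(f"timeout_seconds must be a positive int, got {value!r}")
    return value
```

Note that the lenient version also accepts strings such as `"45"` and even negative numbers, which is exactly the kind of silent tolerance that lets a mistake spread before anyone sees it.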

Coddled code will fester. Spare the rod, spoil the child. These are the metaphors you need to make sense of a codebase.

___

Based on some remarks originally published at Ribbonfarm.