title: Ask LukeW: New Ways into Web Content

url: https://www.lukew.com/ff/entry.asp?2008

Large language (AI) models allow us to rethink how to build software and design user interfaces. To that end, we used these new capabilities to create a different way of interacting with this site: ask.lukew.com

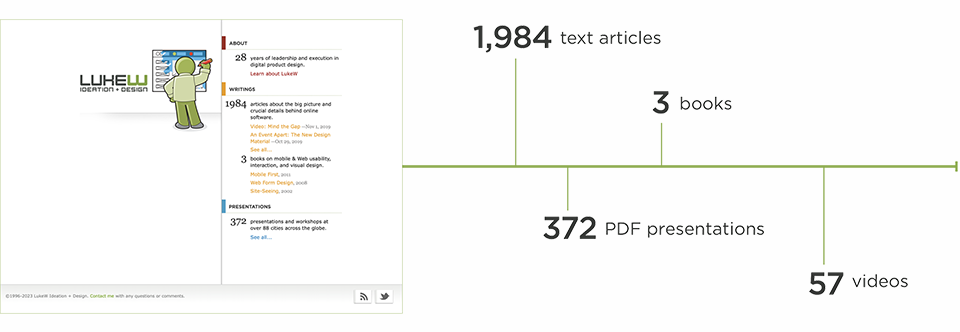

Though quiet recently, this site built up a decent amount of content over the past 27 years. Specifically, there are nearly 2,000 text articles, 375 presentations, 60 videos, and 3 books' worth of explorations and explanations about all forms of digital product design, from early Web sites to mobile apps to augmented reality experiences.

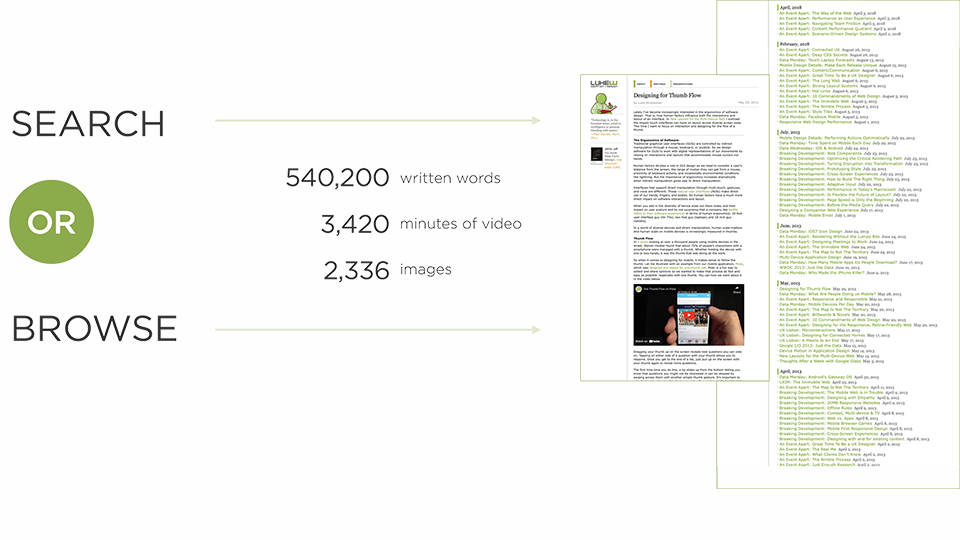

Anyone interested in these materials has essentially two options: search or browse. Searching (primarily through Google) gets people to a specific article, presentation, or video when they have a sense of what they're looking for. Browsing (on this site or other sites with links to this one) helps people discover things they might not have been explicitly looking for.

But with over half a million written words, three and a half thousand minutes of video, and thousands of images, it's hard to know what's available, to connect related content, and ultimately to get the most value out of this site.

Enter large-scale AI models for language (LLMs). By using these models to perform a variety of language operations, we can re-index the content on this site by concept using embeddings and generate new ways to interact with it.
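To make "re-index by concepts using embeddings" concrete, here is a minimal sketch of the general technique: embed every chunk of content once, embed the incoming question, and rank chunks by cosine similarity. The `embed` function below is a hypothetical stand-in (a bag-of-words vector) for a learned embedding model, and the chunk ids are made up; this is not the Ask LukeW code.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a learned embedding model: a sparse
    # bag-of-words vector. A real system would call an embedding model here.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse vectors.
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks: dict[str, str]) -> dict[str, Counter]:
    # Embed every content chunk once, keyed by a (hypothetical) chunk id.
    return {cid: embed(text) for cid, text in chunks.items()}


def search(index: dict[str, Counter], query: str, k: int = 3) -> list[tuple[str, float]]:
    # Rank chunks by similarity to the query and keep the top k.
    q = embed(query)
    scored = [(cid, cosine(q, vec)) for cid, vec in index.items()]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:k]
```

With learned embeddings, "small multiples" and "comparing many charts at once" would land near each other even without shared words. That conceptual matching is what the bag-of-words stand-in cannot do.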

We use large language models to:

- summarize articles

- extract key concepts from articles

- create follow-on questions to ask of specific articles

- make exploratory questions to expose people to new content

- generate answers in response to what people ask
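Each operation in the list above can be thought of as a prompt template sent to the same model. The sketch below shows that pattern for the first four operations (answer generation appears separately). The template wording, the operation names, and the `complete` callable (any function that sends a prompt to an LLM and returns its text) are all hypothetical assumptions, not the prompts this site uses.

```python
from typing import Callable

# Hypothetical prompt templates, one per language operation.
# The wording is illustrative only.
PROMPTS = {
    "summarize": "Summarize the following article in three sentences:\n\n{text}",
    "key_concepts": "List the key concepts in this article, one per line:\n\n{text}",
    "follow_on": "Write three questions this article can answer, one per line:\n\n{text}",
    "exploratory": "Write three questions a newcomer might ask about these topics, one per line:\n\n{text}",
}


def run_operation(op: str, text: str, complete: Callable[[str], str]) -> list[str]:
    # Fill the template for this operation and send it to the model.
    output = complete(PROMPTS[op].format(text=text))
    # Split line-oriented output into items, dropping blank lines and
    # leading list bullets. A summary comes back as a single item.
    return [line.strip("-• ").strip() for line in output.splitlines() if line.strip()]
```

Because these outputs depend only on an article's text, they can be generated once per article ahead of time rather than on every page view.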

This combination of language operations adds up to a very different way to experience the content on lukew.com.

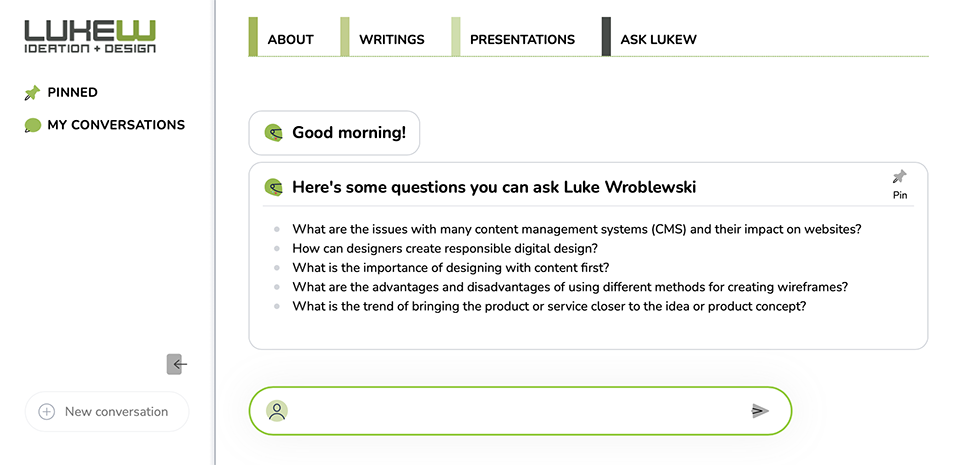

Ask LukeW starts off with a series of suggested questions that change each time someone loads the page. This not only helps with the "what should I ask?" problem of empty text fields but is also a compelling way to explore what the site has to offer. Of course, someone can start with their own specific question. But in testing, many folks gravitate to the suggestions first, which helps expose people to more of the breadth and depth of available content.
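One simple way to get suggestions that change on every load is to draw a random sample from a larger pool of pre-generated exploratory questions. The pool and the default count of four below are assumptions for illustration. The post does not say how its suggestions are selected.

```python
import random
from typing import Optional


def pick_suggestions(pool: list[str], n: int = 4, rng: Optional[random.Random] = None) -> list[str]:
    # Sample n distinct questions from a pre-generated pool; a fresh
    # sample on each page load keeps the starting suggestions varied.
    rng = rng or random.Random()
    return rng.sample(pool, min(n, len(pool)))
```

Passing a seeded `random.Random` makes the selection reproducible in tests while leaving it random in production.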

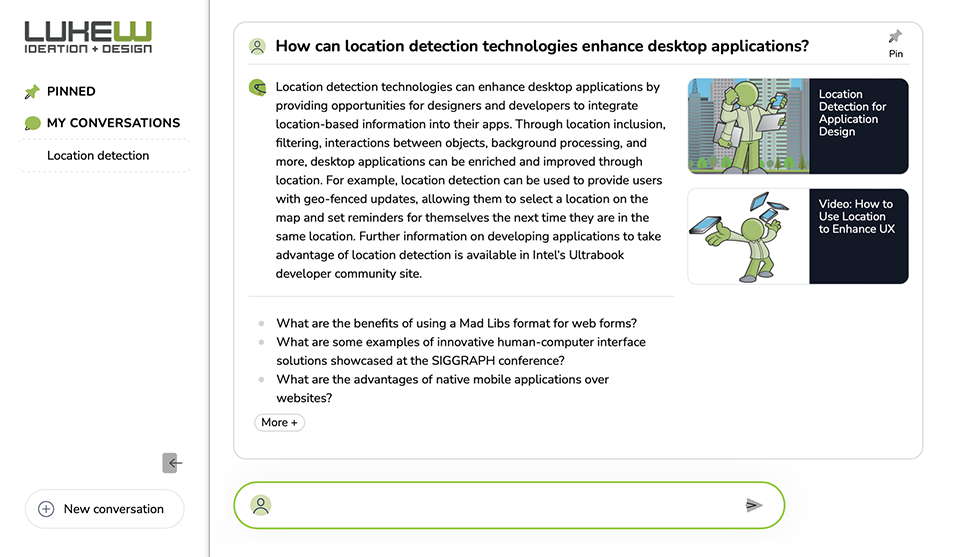

After someone selects a question or types their own question, we generate an answer using the corpus of information on lukew.com. These results tend to be more opinionated than what a large language model operating solely on a much bigger set of content (like the Web) provides, even with prompt engineering to direct it toward specific kinds of answers (e.g., UI design-focused ones).

The content we use to answer someone's question can come from one or more articles, so we provide visual sources to make this clear. In the current build, we're citing Web pages, but PDFs and videos are next. It's also worth noting that we follow up each answer with additional suggested questions to once again give people a better sense of what they can ask next. No dead ends.
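Because an answer can draw on several articles, the retrieved passages can be numbered by source before they go to the model. That way the answer can cite them and the interface can render one source card per article. This is a sketch of that general retrieval-augmented pattern under assumed names (`Chunk`, `build_answer_prompt`) and an illustrative prompt. It is not the site's actual code.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    url: str    # source page the passage came from
    title: str  # used for the visual source card
    text: str   # the retrieved passage itself


def build_answer_prompt(question: str, chunks: list[Chunk]) -> tuple[str, list[Chunk]]:
    # Give each distinct source article one number, so passages from the
    # same article share a citation and a single source card.
    sources: list[Chunk] = []
    number_for_url: dict[str, int] = {}
    passages = []
    for c in chunks:
        if c.url not in number_for_url:
            number_for_url[c.url] = len(sources) + 1
            sources.append(c)
        passages.append(f"[{number_for_url[c.url]}] {c.text}")
    prompt = (
        "Answer the question using only the passages below, citing sources by number.\n\n"
        + "\n".join(passages)
        + f"\n\nQuestion: {question}"
    )
    return prompt, sources
```

The returned `sources` list is what would drive the source cards shown under each answer. Restricting the model to the numbered passages is what keeps answers grounded in this site's content rather than the wider Web.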

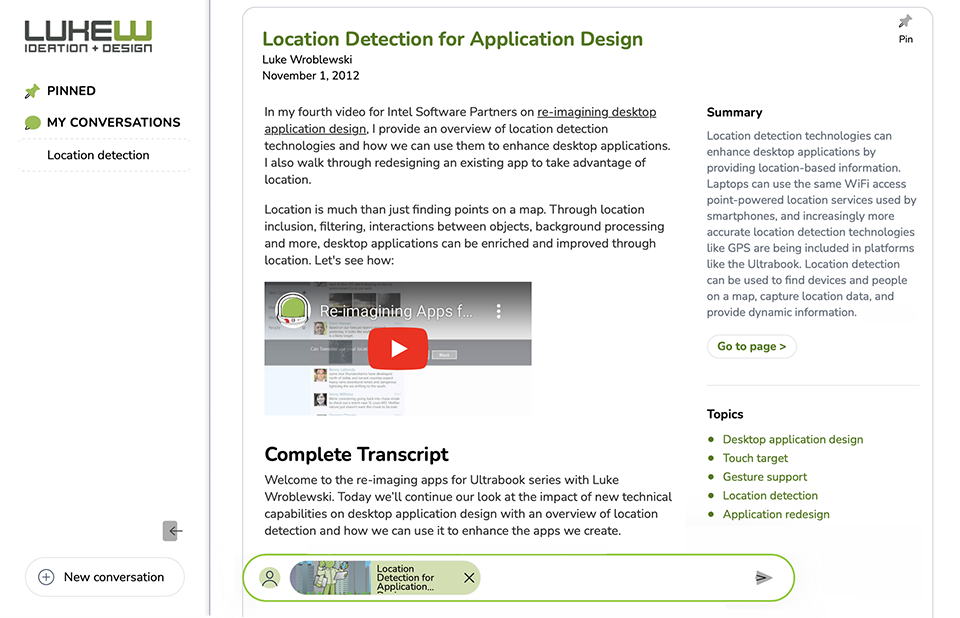

If someone wants to go deeper into any of the sourced materials, they can select the card and get an article-specific experience. Here we make use of LLM language operations to create a summary, extract related topics and provide suggested questions that the article can answer. People can ask questions of just this document (as indicated by the green article "chip" in the question bar) or go back to site-wide questions by tapping the close (x) icon.
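Scoping questions to a single article can be modeled as a filter applied before retrieval. While the article chip is active, only that article's chunks are candidates, and closing the chip removes the filter. In the sketch below, word overlap stands in for embedding similarity, and the chunk dictionaries with `url` and `text` keys are an assumed shape.

```python
import re
from typing import Optional


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(chunks: list[dict], question: str, scope_url: Optional[str] = None, k: int = 3) -> list[dict]:
    # With an article chip active, only that article's chunks are candidates;
    # with scope_url=None (chip closed), every chunk on the site is.
    pool = chunks if scope_url is None else [c for c in chunks if c["url"] == scope_url]
    q = words(question)
    # Rank by simple word overlap, a stand-in for embedding similarity.
    return sorted(pool, key=lambda c: len(q & words(c["text"])), reverse=True)[:k]
```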

As the number of answers builds up, we collapse each one automatically, so people can focus on the current question they've asked. This also makes it easier to scroll through a long conversation and pick out answers from short summaries consisting of the question and the first two lines of its answer.
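A collapsed entry is the question plus a two-line preview of its answer. A sketch of that rule follows. The 80-character display width and the trailing "..." marker are assumptions. In a browser this would more likely be done with CSS line clamping than in code.

```python
import textwrap


def collapse(question: str, answer: str, width: int = 80) -> str:
    # Wrap the answer to an assumed display width and keep the first two lines.
    lines = textwrap.wrap(answer, width=width)
    preview = "\n".join(lines[:2])
    if len(lines) > 2:
        preview += "..."  # signal that the full answer is hidden
    return f"{question}\n{preview}"
```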

People can also pin individual question-and-answer pairs to save them for later. Using the menu bar on the left, they can come back to previous conversations or start new ones.

While there are a number of features in the Ask LukeW interface, it's still very much a beta. We don't save state from question to question, so the kind of ongoing dialog people may expect from ChatGPT isn't there yet. Pinned answers and saved conversations are only stored locally (cookie-based), and, as mentioned before, PDFs and videos aren't yet part of the index.
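Keeping pins in a cookie means serializing them into a cookie-safe string and watching the size limit. The sketch assumes pins are stored as compact JSON in a single percent-encoded cookie value. The post doesn't describe the actual format. RFC 6265 asks browsers to support at least 4096 bytes per cookie, counting the name and attributes, so the check below is only approximate.

```python
import json
from urllib.parse import quote, unquote

# Approximate per-cookie limit (RFC 6265 minimum; includes name and attributes).
MAX_COOKIE_BYTES = 4096


def pins_to_cookie(pins: list[dict]) -> str:
    # Serialize pinned question/answer pairs into a percent-encoded,
    # cookie-safe value (ASCII only, so length equals byte count).
    value = quote(json.dumps(pins, separators=(",", ":")))
    if len(value) > MAX_COOKIE_BYTES:
        raise ValueError("pinned answers exceed the cookie size limit")
    return value


def pins_from_cookie(value: str) -> list[dict]:
    # Reverse the encoding to recover the list of pinned pairs.
    return json.loads(unquote(value))
```

That size ceiling is one reason local, cookie-based storage suits a beta but not long-term saved conversations.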

Despite that, it's been interesting to explore how an existing body of content can gain new life using large language model technology. I've been regularly surprised and interested by questions like:

- How can progressive enhancement be used in software development?

- What are the central mental traits that people unconsciously display through the products they buy?

- What are the design considerations for touch-based apps for kids?

- What is small multiples and how can it help people make sense of large amounts of information quickly and easily?

- What is the debate around the utility of usability testing in design?

And I wrote all this content! Since I wrote it over a quarter century, maybe it's not that surprising that I don't remember it all. Anyhow... I hope you also enjoy trying out ask.lukew.com, and feel free to send over any ideas or comments.

Acknowledgments

Big thanks to Yangguang Li (front end), Thanh Tran (design), and Sam Pullara (back end) for helping pull together this exploration.