
title: The Expanding Dark Forest and Generative AI

url: https://maggieappleton.com/ai-dark-forest

hash_url: 140458968f

archive_date: 2024-02-19

og_image: https://maggieappleton.com//images/og/fe37968757d0ac03e4b01c7496b2e8ac.png

description: Proving you’re a human on a web flooded with generative AI content

favicon: https://maggieappleton.com//images/favicon/favicon.ico

language: en_GB

Assumed audience: people who have heard of GPT-3 / ChatGPT and are vaguely following the advances in machine learning, large language models, and image generators, as well as people who care about making the web a flourishing social and intellectual space.

The dark forest theory of the web points to the increasingly life-like but life-less state of being online. Most open and publicly available spaces on the web are overrun with bots, advertisers, trolls, data scrapers, clickbait, keyword-stuffing “content creators,” and algorithmically manipulated junk.

It’s like a dark forest that seems eerily devoid of human life – all the living creatures are hidden beneath the ground or up in trees. If they reveal themselves, they risk being attacked by automated predators.

Humans who want to engage in informal, unoptimised, personal interactions have to hide in closed spaces like invite-only Slack channels, Discord groups, email newsletters, and small-scale blogs. Or make themselves algorithmically incoherent in public venues.

That dark forest is about to expand.

Over the last six months, we’ve seen a flood of LLM copywriting and content-generation products come out. They’re designed to pump out advertising copy, blog posts, emails, social media updates, and marketing pages. And they’re really good at it.

These models became competent copywriters much faster than people expected – too fast for us to fully process the implications. Many people had their come-to-Jesus moment a few weeks ago when OpenAI released ChatGPT, a slightly more capable version of GPT-3 with an accessible chat-bot style interface. The reaction made clear how few people had been tracking the progress of these models.

To complicate matters, language models are not the only mimicry machines gathering speed right now. Image generators have been on a year-long sprint. In January they could barely render a low-resolution, disfigured human face. By the autumn they reliably produced images indistinguishable from the work of human photographers and illustrators.

A Generated Web

There’s a swirl of optimism around how these models will save us from a suite of boring busywork: writing formal emails, internal memos, technical documentation, marketing copy, product announcements, advertisements, cover letters, and even medical negotiations.

But we’ll also need to reckon with the trade-offs of making insta-paragraphs and 1-click cover images. These new models are poised to flood the web with generic, generated content.

You thought the first page of Google was bunk before? You haven’t seen Google where SEO optimizer bros pump out billions of perfectly coherent but predictably dull informational articles for every longtail keyword combination under the sun.

Marketers, influencers, and growth hackers will set up pipelines that auto-publish a relentless and impossibly banal stream of LinkedIn #MotivationMonday posts, “engaging” tweet 🧵 threads, Facebook outrage monologues, and corporate blog posts.

It goes beyond text too: TikTok clips, podcasts, slide decks, and Instagram stories can all be generated by stringing together ML systems.

We’re about to drown in a sea of pedestrian takes. An explosion of noise that will drown out any signal. Goodbye to finding original human insights or authentic connections under that pile of cruft.

Many people will say we already live in this reality. We’ve already become skilled at sifting through unhelpful piles of “optimised content” designed to gather clicks and advertising impressions.

4chan proposed dead internet theory years ago: that most of the internet is “empty and devoid of people” and has been taken over by artificial intelligence. A milder version of this theory is simply that we’re overrun with bots. Most of us take that for granted at this point.

But I think the sheer volume and scale of what’s coming will be meaningfully different. And I think we’re unprepared. Or at least, I am.

Passing the Reverse Turing Test

Our new challenge as little snowflake humans will be to prove we aren’t language models. It’s the reverse Turing test.

After the forest expands, we will become deeply sceptical of one another’s realness. Every time you find a new favourite blog or Twitter account or TikTok personality online, you’ll have to ask: Is this really a whole human with a rich and complex life like mine? Is there a being on the other end of this web interface I can form a relationship with?

Before you continue, pause and consider: How would you prove you’re not a language model generating predictive text? What special human tricks can you do that a language model can’t?

1. Triangulate objective reality

As language models become increasingly capable and impressive, we should remember they are, at their core, linguistic predictors. They cannot (yet) reason like a human.

They do not have beliefs based on evidence, claims, and principles. They cannot consult external sources and run experiments against objective reality. They cannot go outside and touch grass.

In short, they do not have access to the same shared reality we do. They do not have embodied experiences, and cannot sense the world as we can sense it; they don’t have vision, sound, taste, or touch. They cannot feel emotion or tightly hold a coherent set of values. They are not part of cultures, communities, or histories.

They are a language model in a box. If a historical event, fact, person, or concept wasn’t part of their training data, they can’t tell you about it. They don’t know about events that happened after a certain cutoff date.

I found Murray Shanahan’s paper Talking About Large Language Models (2022) full of helpful reflections on this point:

> Humans are members of a community of language-users inhabiting a shared world, and this primal fact makes them essentially different to large language models. We can consult the world to settle our disagreements and update our beliefs. We can, so to speak, “triangulate” on objective reality.
>
> Murray Shanahan – Talking About Large Language Models

This leaves us with some low-hanging fruit for humanness. We can tell richly detailed stories grounded in our specific contexts and cultures: place names, sensual descriptions, local knowledge, and, well, the je ne sais quoi of being alive. Language models can decently mimic this style of writing, but most don’t without extensive prompt engineering. They stick to generics. They hedge. They leave out details. They have trouble maintaining a coherent sense of self over thousands of words.

Hipsterism and recency bias will help us here. Referencing obscure concepts, friends who are real but not famous, niche interests, and recent events all make you plausibly more human.

2. Be original, critical, and sophisticated

Easier said than done, but one of the best ways to prove you’re not a predictive language model is to demonstrate critical and sophisticated thinking.

Language models spit out text that sounds like a B+ college essay. Coherent, seemingly comprehensive, but never truly insightful or original (at least for now).

In a repulsively evocative metaphor, they engage in “human centipede epistemology.” Language models regurgitate text from across the web, which some humans read and recycle into “original creations,” which then become fodder to train other language models, and around and around we go recycling generic ideas and arguments and tropes and ways of thinking.

Hard exiting out of this cycle requires coming up with unquestionably original thoughts and theories. It means seeing and synthesising patterns across a broad range of sources: books, blogs, cultural narratives served up by media outlets, conversations, podcasts, lived experiences, and market trends. We can observe and analyse a much fuller range of inputs than bots and generative models can.

It will raise the stakes for everyone. As both consumers of content and creators of it, we’ll have to foster a greater sense of critical thinking and scepticism.

This all sounds a bit rough, but there’s a lot of hope in this vision. In a world of automated intelligence, our goalposts for intelligence will shift. We’ll raise our quality bar for what we expect from humans. When a machine can pump out a great literature review or summary of existing work, there’s no value in a person doing it.

3. Develop creative language quirks, dialects, memes, and jargon

The linguist Ferdinand de Saussure argued there are two kinds of language:

- La langue is the formal concept of language. These are the words we print in the dictionary, distribute via educational institutions, and reprimand one another for getting wrong.

- La parole is the speech of everyday life. These are the informal, diverse, and creative speech acts we perform in conversations, social gatherings, and text to the group WhatsApp. This is where language evolves.

We have designed a system that automates a standardised way of writing. We have codified la langue at a specific point in time.

What we have left to play with is la parole. No language model will be able to keep up with the pace of weird internet lingo and memes. I expect we’ll lean into this. Using neologisms, jargon, euphemistic emoji, unusual phrases, ingroup dialects, and memes-of-the-moment will help signal your humanity.

Not unlike teenagers using language to subvert their elders, or oppressed communities developing dialects that allow them to safely communicate amongst themselves.

4. Consider institutional verification

This solution feels the least interesting. We’re already hearing rumblings of how “verification” by centralised institutions or companies might help us distinguish between meat brains and metal brains.

The idea is something like this: you show up in person to register your online accounts or domains. You then get some kind of special badge or mark online legitimising you as a Real Human. It may or may not be on the blockchain somehow.

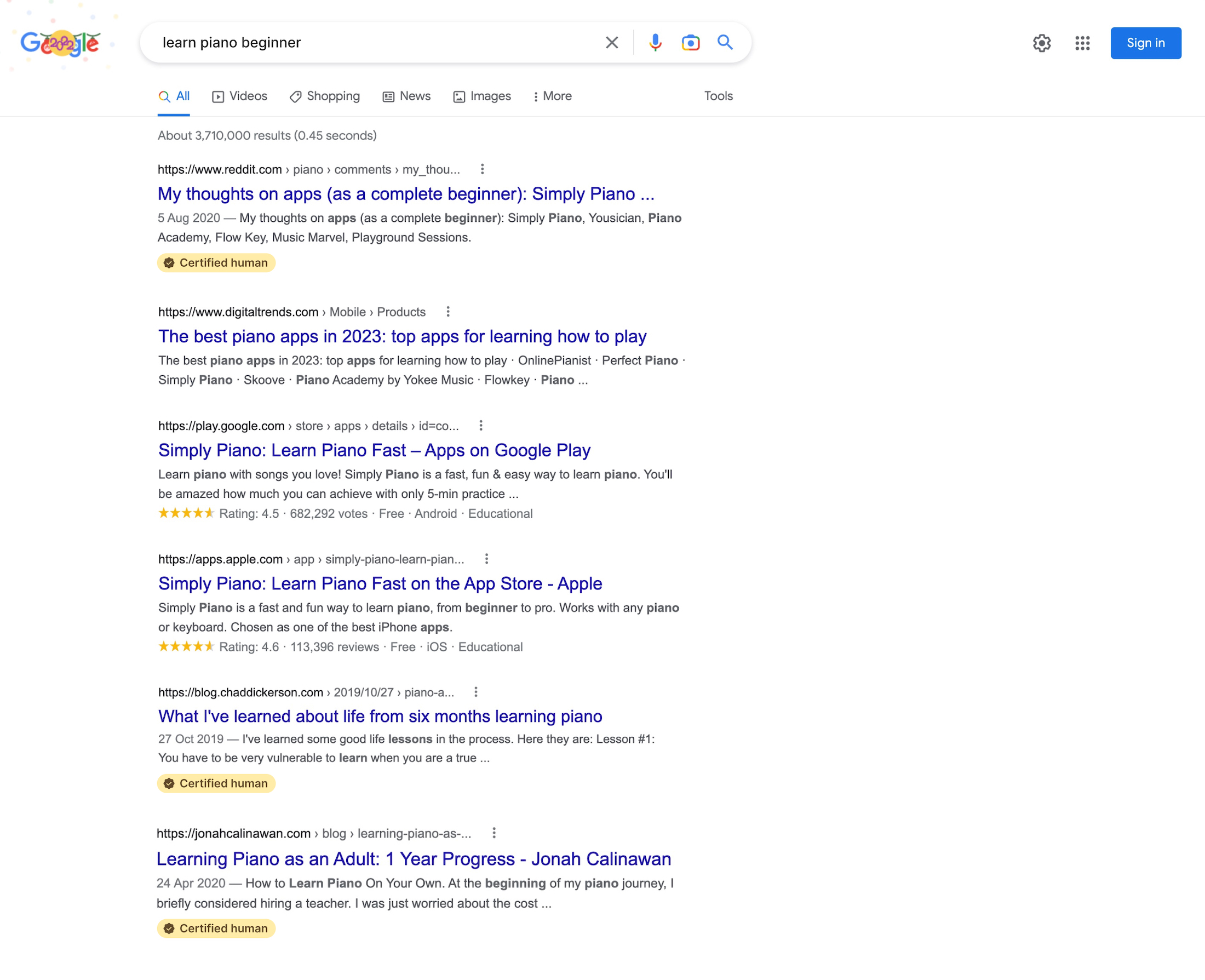

Google might look something like this:

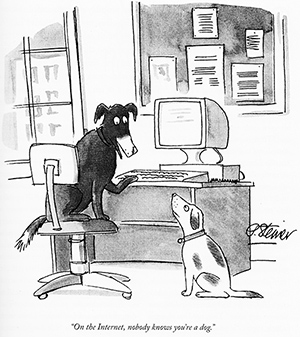

The whole thing seems fraught with problems, susceptible to abuse, and ultimately impractical. Would it even be the web if everyone knew you were really a dog?

5. Show up in meatspace

The final edge we have over language models is that we can prove we’re real humans by showing up IRL with our real human bodies. We can arrange to meet Twitter mutuals offline over coffee. We can organise meetups and events and conferences and unconferences and hangouts and pub nights.

Lars Doucet proposed several knock-on effects from this offline-first future. We might see a rise in anti-screen culture, as well as price increases in densely populated areas.

For the moment we can still check humanness over Zoom, but live video generation is getting good enough that I don’t think that defence will last long.

There are, of course, many people who can’t move to an offline-first life: people with physical disabilities, people who live in remote, rural places, and people with limited time and caretaking responsibilities for the very young or the very old. They will have a harder time verifying their humanness online. I don’t have any grand ideas to help solve this, but I hope we find better solutions than my paltry list.

As the forest grows darker, noisier, and less human, I expect to invest more time in in-person relationships and communities. And while I love meatspace, this still feels like a loss.