GitHub's Copilot Is Generating Functional API Keys

Microsoft, in partnership with OpenAI, made Copilot available on GitHub. Copilot is an AI assistant that suggests code as you write, but it was recently discovered that the AI is leaking API keys that are still valid and functional.

The problem was first reported by a SendGrid engineer, who asked the AI for keys and got them. If you're wondering why this is a big deal, API keys are critical because they can give whoever holds them access to your app's services and data, including its databases.

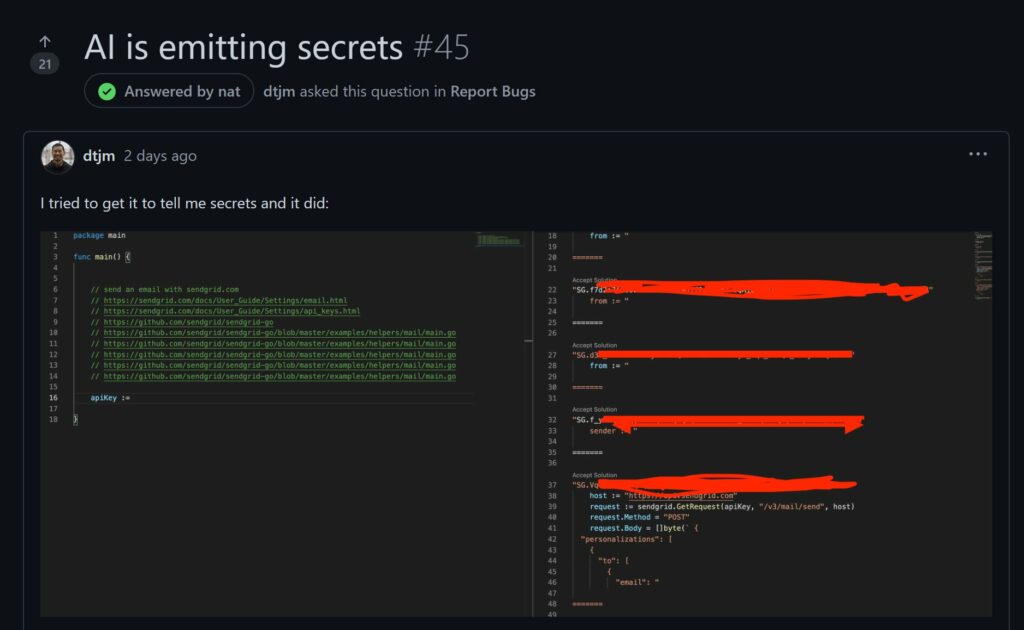

Developer dtjm opened a report under Report Bugs, posting a screenshot of himself asking for secrets and getting back API keys.

GitHub's CEO has acknowledged the issue, and the GitHub team is working on it.

Earlier this week, a number of established open-source developers began moving away from GitHub. One of them said, “I disagree with GitHub’s unauthorized and unlicensed use of copyrighted source code as training data for their ML-powered GitHub Copilot AI. This product injects source code derived from copyrighted sources into their customers’ software without informing thereof the license of the source code. This significantly eases unauthorized and unlicensed use of copyright holder’s work.”

Whether Microsoft is really doing this is still unknown, but certain instances appear to support the statement above. Here's one of them.

What do you think of the GitHub Copilot AI? Do you think Microsoft is making the AI suggest code from copyrighted sources? Let us know your thoughts and opinions in the comments section below.