
No one’s coming. It’s up to us.

Adapted from “We Are The Very Model Of Modern Humanist Technologists”, a lightning talk given at #foocamp 2017 in San Francisco on Saturday, November 4th, 2017. For context, a lightning talk is normally around five minutes long.

Last autumn, I was invited to Foo Camp, an unconference organized by O’Reilly Media. O’Reilly have held Foo Camps since 2003, and the events are invitation-only (hence the name: Friends of O’Reilly).

Foo Camp 2017’s theme was about how we (i.e. the attendees and, I understand, technologists in general) could “bring about a better future by making smart choices about the intersection of technology and the economy.”

This intersection of technology and the economy (which is a bit of a, shall we say, passive way of describing the current economic change and disruption) is clearly an interest of Tim O’Reilly’s (the eponymous O’Reilly); he’s recently written a book, WTF? What’s the Future and Why It’s Up to Us, about the subject.

One part of the unconference structure of a Foo Camp is the lightning talks: quick, five-minute “provocative” talks about a subject. I’d been thinking and writing about technology’s role in society for a while in my newsletter (admittedly from an amateur, non-academic point of view), so Foo Camp’s lightning talks provided a good opportunity to pull my thoughts together in front of an audience of peers and experts.

The talk I submitted was titled “The very model of modern humanist technologists”, and this essay is an adaptation for a wider audience.

While the theme of 2017’s Foo Camp was how “we” could bring about a better future by making smart choices about the intersection of technology and the economy, I made the case that the only way for “us” to bring about that better future is through some sort of technological humanism.

To make that case — ultimately a case for restraint and more considered progress rather than the stereotypical call to action to move fast and break things — I decided to tell a personal story.

You see, when I make this case for humane technology, I’m making it as both a technologist and a non-technologist.

In this photograph, I’m probably around four or five years old.

My parents moved from Hong Kong to the United Kingdom sometime in the 1970s as part of the diaspora. Their parents “sent them away”, the idea being that their children, and thus their grandchildren, would have access to a better education and a better life in the United Kingdom.

Both my parents are academics — my dad’s a professor of manufacturing and my mother has a doctorate in educational linguistics. Education was important to them, and in the way that we learn about what’s important by watching the adults in our lives, what was important to them became important to me.

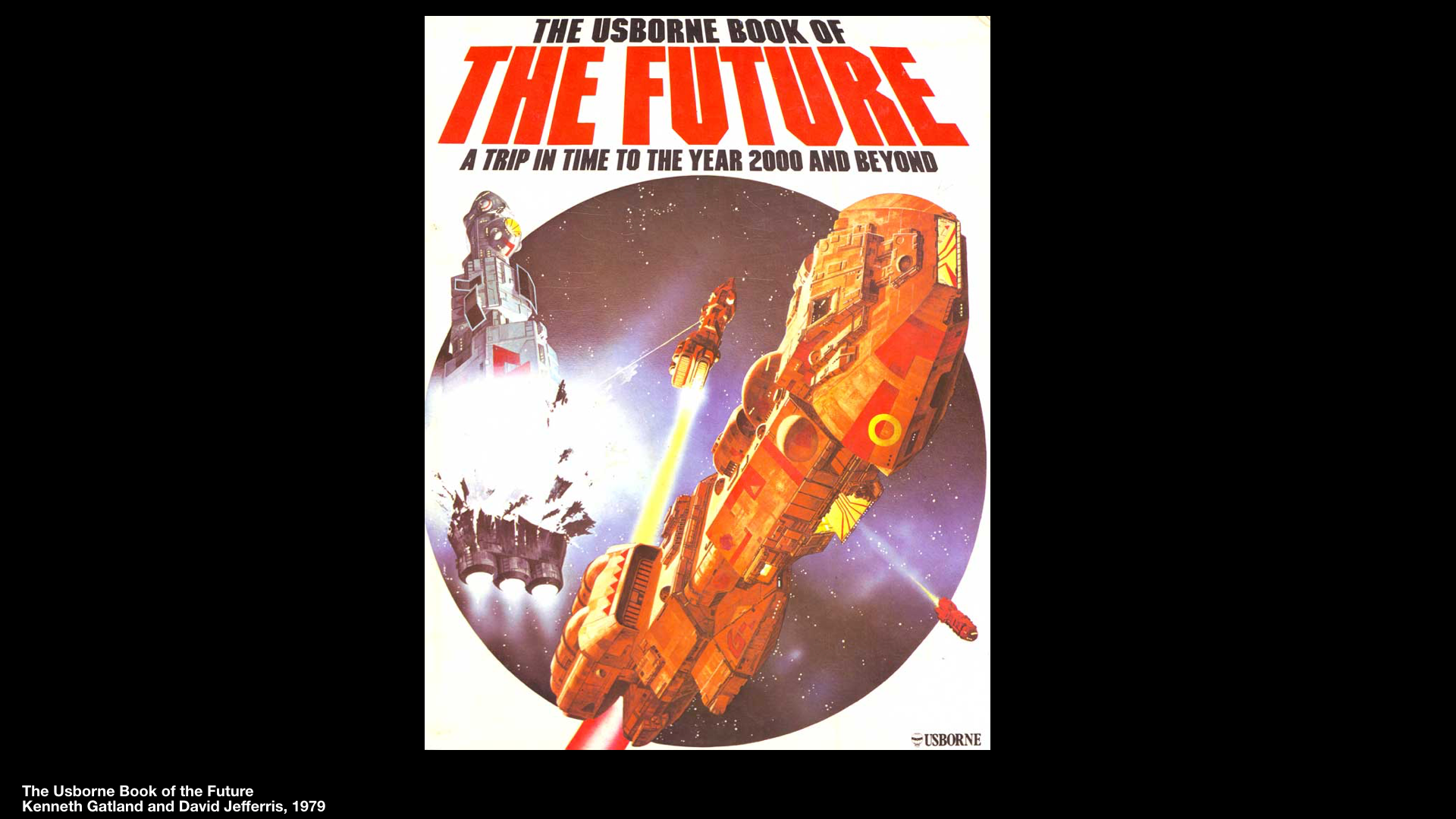

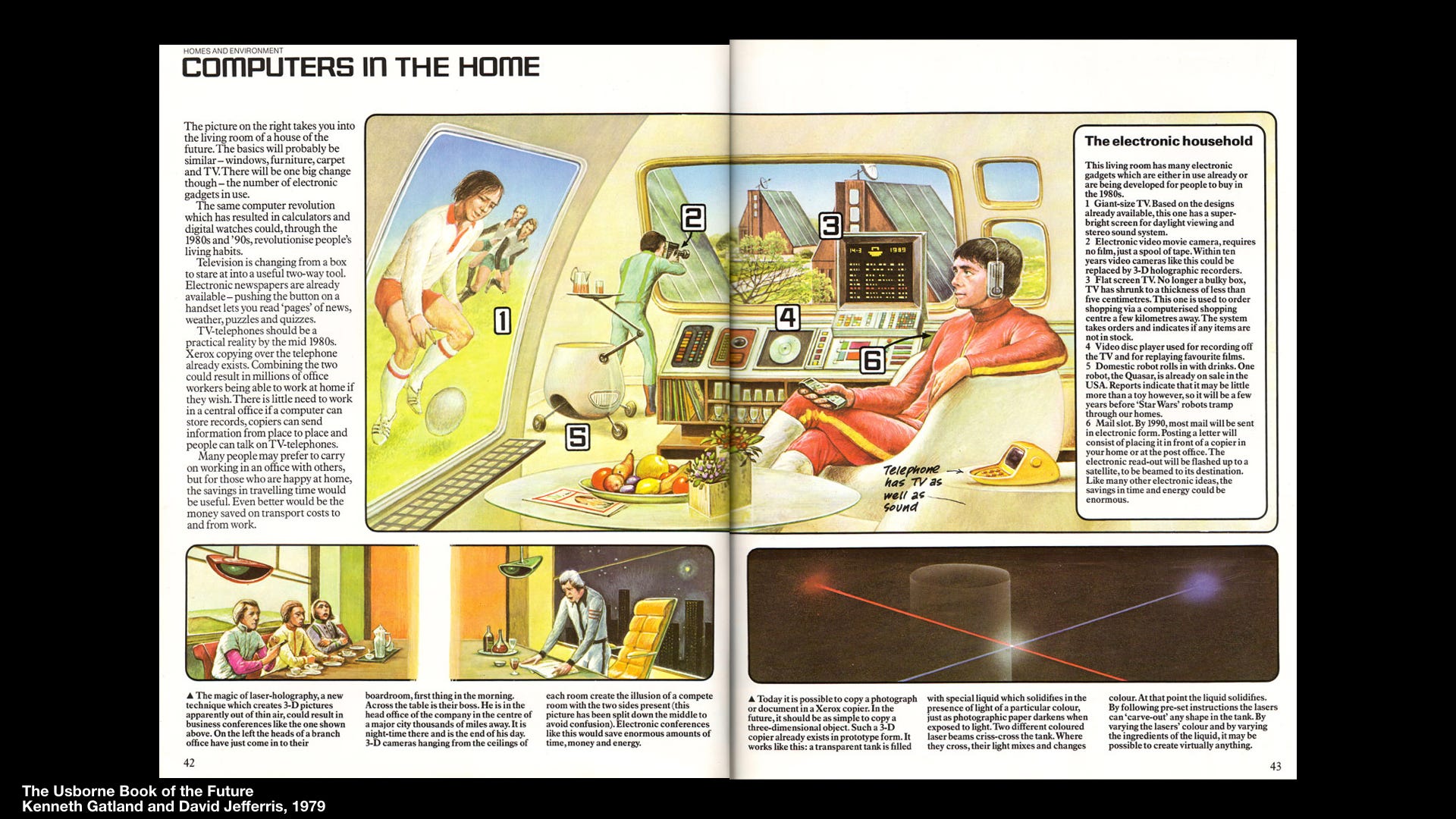

I was born in 1979, the same year that The Usborne Book of the Future was published.

The Usborne Book of the Future told an optimistic story of how humanity would use technology to solve problems and thrive.

This book would become a sort of comfort book to me: I could retreat into its pages and read about a safer, fairer, more equitable and exciting future that would be realized within my lifetime. When you’re worried about not fitting in, the promise of a future where everyone belongs is pretty alluring.

I was also incredibly lucky. As a lecturer and later a professor, my dad would borrow computers and equipment from his lab at the university to show them to his family at home.

One of my earliest memories is of him bringing home a BBC Micro, and of being terrified by the sound (not the graphics) of Galaxian, when I was around four years old.

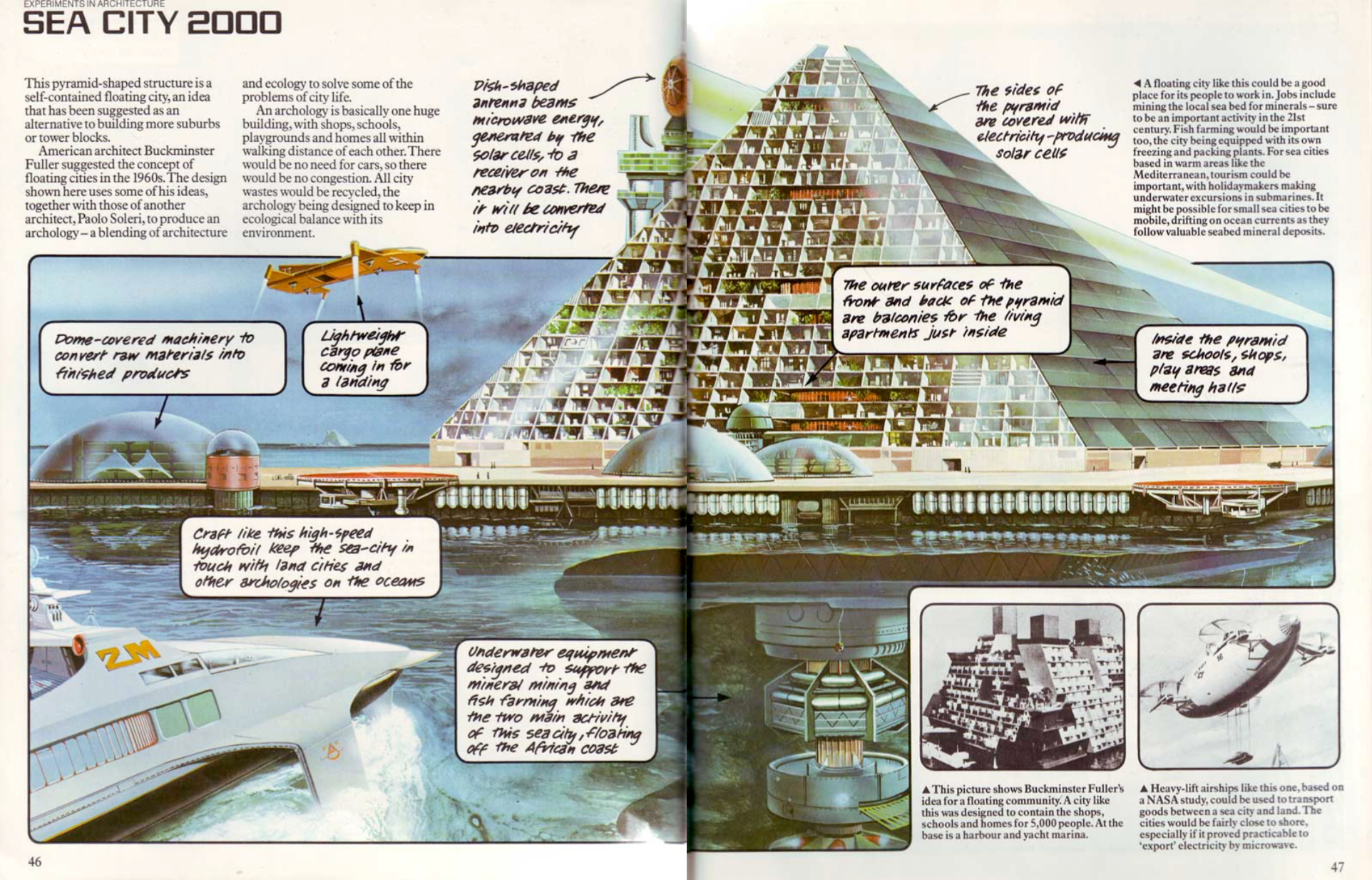

Another story: one day, in the mid-80s, my dad arrived home with a giant piece of paper, bigger than me. It was a CAD rendering of the Space Shuttle. I thought it was amazing. I thought he was amazing: he’d taken a bit of that future and brought it into our living room.

Right then, Shuttle was a symbol of a better future, tantalizingly close.

I would have been six years old.

What else?

Like many others — and many people here too, I suspect — I grew up with these paintings commissioned by NASA imagining how we’d live in space in the future:

They showed how technology would save us.

How technology and science would make everything better.

They showed a fairer future.

In that future, everyone would belong, and nobody would feel left out. Who wouldn’t want that?

(Later, I would have the experience and wisdom to look back on these images and think about everything that wasn’t in these paintings of the future, about all the people and cultures who were missing.)

For a kid growing up in the 80s and 90s, this vision of the future, of fitting in, of being accepted, was seductive. Especially so for a second-generation immigrant.

And over the course of the 80s and 90s, it felt like a consensus was forming: computers would be a massive part of our future. They’d be integral in bringing about those visions of a fairer, more equitable future.

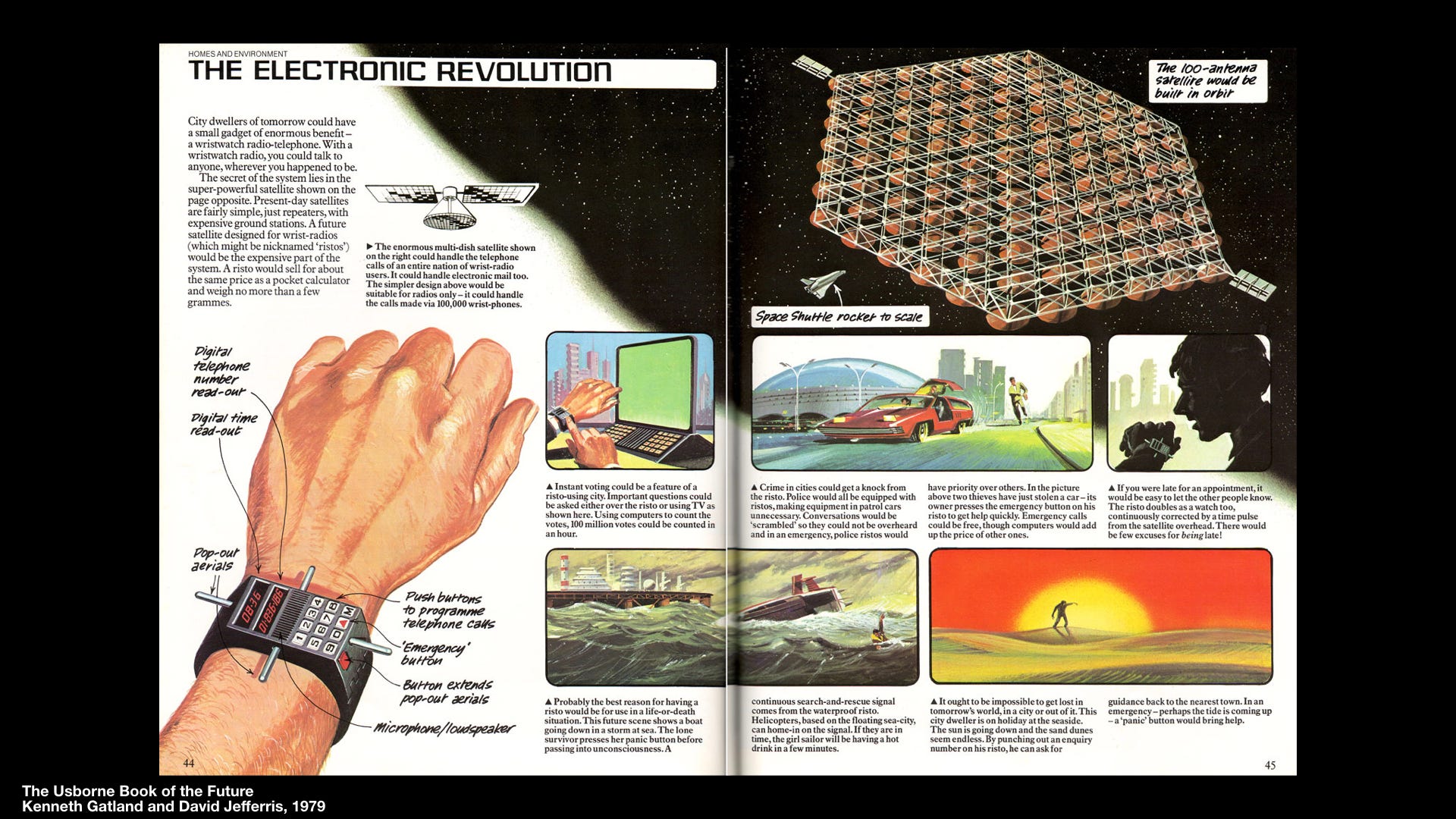

In the future, I would have my very own computer watch, complete with an emergency button I could press to summon emergency services. I have that watch, now.

By the time I got exposed to bulletin boards in the early 1990s, I was hooked.

Later, figuring out how to use my dad’s academic SLIP account in the mid-90s convinced me.

I became a believer. On the Internet, in a connected society, everyone would be equal. Everyone would be accepted. What we looked like, our gender, our meat wouldn’t matter. What was on the inside would count more than what was on the outside.

And when I’ve spoken to friends about this, they’ve agreed: for a brief moment in the 1990s, it was possible for a woman in technology (albeit more likely than not, a white, professional woman) to be accepted just for what she knew.

At the same time, alongside the growing belief that the internet would be a force for good, I was coming to understand and accept that we’re a tool-making, tool-using, technological species.

In my talk, I made this point using the famous match cut from Stanley Kubrick’s 2001: A Space Odyssey, in which humanity’s monolith-manipulated ancestors learn to use bones as tools and the film jumps forward in time to a space-faring civilization.

I believed that our use of technology was a reason to be optimistic, and I still believe it is a reason for us to be optimistic, because we use technology to solve problems.

(I mean sure, crows do it too. But crows haven’t left our gravity well and as far as we know they don’t think digital watches are neat, so: sucks to be crows.)

All of the above is to show that I’m a technologist. I was born into a generation, I think, that was optimistic, if not utopian, and whose intentions were good even while its knowledge and experience weren’t yet mature. In that way, I consider myself a technologist: I believe that we create technology to solve problems, and that solving those problems is part of the way to a better society for ourselves and those who come after us.

Tim O’Reilly is clearly a technologist, too. If there were any doubt, in the introduction to 2017’s Foo Camp, he stated that “technology is ultimately the solution to human problems.”

I agree.

Look: growing up, I felt like computers were amazing.

Not only did they help me do wonderful, brilliant things in the present, but I felt I could see their potential, and I was persuaded by it. I was, and remain, convinced that computing is an amplifier for all the beauty and sadness and for all the hope and despair of humanity.

The new media and online communities I immersed and surrounded myself in during the 90s and early 2000s led to deep, long-lasting friendships, family and children.

As a technologist, I think one of the stories we told ourselves was that networked computing would fix things. Or at the very least would improve things. That the technology would prove to be an accelerator and an amplifier of the arc of history bending toward justice.

In one respect, I think we got part of what we wanted. Networked computing would have the best chance of fixing things if it were ubiquitous, and sometime during the last 10 years, thanks to an incredibly complicated intersection of Moore’s law, globalism, neoliberal capitalism, the military industrial complex, colonialism and some really hard work, computing got cheap enough. Network access became wireless and then pervasive enough that we hit a qualitative change.

“Enough” people have access to networked computing now, to the extent that as technologists we’re capable of making jokes about most computing power not feeling like “computers”. The trendlines of the world’s major social networks are pointing upwards and feel unstoppable. It feels inevitable that everyone will get networked, in some fashion.

I think we’ve clearly reached a point where enough people have access to networked computing, at any time, in any location. This is the qualitative change I’m talking about: a sort of point of no return, where networked technologies can be deployed easily and quickly enough that their effects are no longer more or less limited to early-adopter communities.

The webcam isn’t being pointed at just one coffee pot. It wants to be pointed at every coffee pot — and now, it just about can.

When enough people gained access to networked computing (available at any time, in any location) the effects that networked computing enabled didn’t just happen to a comparatively small group of technologists. They started to happen to other people, too. To everyone.

Platforms like Twitter and, later, Strava started out serving small, like-minded groups. These affinity groups (a particular social group in the Bay Area for Twitter, semi-pro athletes for Strava) had at least some shared expectations around privacy, for example. But when those platforms grow past their original audiences into wider ones (commonly propelled by the growth expectations that investment places upon them), those privacy expectations change. When Strava grew from a social network for semi-pro athletes into one aiming to attract amateur and casual athletes, a more mass market, its effects and outcomes qualitatively changed. Those effects then have to be dealt with as platforms interact with greater segments of society.

This seems like a trite observation, but I think it’s fundamental to the psychology and culture of stereotypical west coast style technologists. And, for what it’s worth, I also think it’s applicable to people in general. The ability to systemically affect entire societies in such short timespans isn’t something that I believe we’re intuitively geared up to deal with without very, very deliberately engaging Kahneman’s System 2.

And yet not everything is better.

Sure, some things are better. Networked, global neoliberal capitalism has certainly and undeniably lifted many out of poverty. But at the same time, the world we’re living in isn’t the drastically improved society of people living in harmony I was promised as a child.

The 2017 my children and I live in is a messy, human future. One where we talk about the inadvertent algorithmic cruelty of being awkwardly reminded to celebrate your child’s death.

Where people worry about the energy expenditure of a cryptocurrency.

My talk was originally written for a select gathering (to be clear, Foo Camp has been criticised, and rightly so, as being problematic in terms of inclusivity and diversity) of influential technologists worried that we need to make smarter choices about technology and its effects on our economy.

But I’m worried that just thinking about technology and our economy isn’t going to help.

If technology is the solution to human problems, we need to do the human work of figuring out and agreeing on what our problems are and the kind of society we want. Then we can figure out what technology we want and need to bring about that society. Otherwise we’re back to a coordination problem: which problems, exactly?

In my talk I put forward the suspicion that many of the technologists gathered in the room weren’t attending just because smarter decisions needed to be made about technology and the future of the economy. I suspected that many were in the room because, at the end of the day, everything wasn’t going quite as well as we thought or wished.

Because the better future promised by the books we grew up with didn’t automatically happen when enough people got connected.

Because we know we need to do better.

Because inequality is rising, not decreasing.

Because of what feels like rising incidents of hate and abuse, such as Gamergate.

Because of algorithmic cruelty.

Because of YouTube and Logan Paul and YouTube and child exploitation.

Because right now, it feels like things are getting worse.

Here’s what I think.

I think we need to figure out what society we want first. The future we want. And then we figure out the technology, the tools, that will get us there.

Because if we’re a tool-making, tool-using species that uses technology to solve human problems, then the real question is this: what problems shall we choose to solve?

In my talk, I explored just one question that I think will help us in that journey.

I think we need to question our gods.

By gods, I mean the laws, thinking, aphorisms and habits that we as technologists assume to be true and that appear to guide the development of technology.

I’ve got two examples.

The first is Metcalfe’s law. The Metcalfe in question is Robert Metcalfe, a co-inventor of Ethernet, a stupendously successful networking standard. You wouldn’t be reading this, in this way, were it not for Metcalfe.

Metcalfe’s law says that the value of a telecommunications network is proportional to the square of the number of connected users of the system. Most technologists know of this law, or a version of it.

One of the ways people think about and illustrate Metcalfe’s law is that a telephone or fax machine isn’t particularly useful on its own (the examples go to show how comparatively old the law is), but two are much more useful, four even more so, and so on. These illustrations amount to a kind of folk understanding.
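To make the fax-machine illustration concrete, here is a minimal sketch. It assumes the common folk reading in which a network’s value tracks the number of possible pairwise connections, n(n - 1)/2, a count that grows roughly with the square of n. That reading and the function name are illustrative assumptions, not Metcalfe’s own method. Note that the code counts connections, not value: what each connection is worth is left entirely open.

```python
def possible_connections(n: int) -> int:
    """Distinct pairs that n connected devices (or users) could form: n choose 2.

    Illustrative assumption: this is the count that the folk version of
    Metcalfe's law treats as a proxy for a network's value.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (n - 1) // 2


if __name__ == "__main__":
    # One fax machine can't call anyone; as n grows, doubling n
    # comes close to quadrupling the number of possible connections.
    for n in (1, 2, 4, 8, 16):
        print(f"{n:>2} machines -> {possible_connections(n):>3} possible connections")
```

Running it prints 0, 1, 6, 28 and 120 possible connections for 1, 2, 4, 8 and 16 machines.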

Lately, Metcalfe’s law has been applied to social networks and not just to equipment that’s connected together. Applied to social networks, it becomes a folk belief that the more users your social network has, the more valuable it is. If you value value, then, the law points you in the direction of increasing the number of your users.

The problem is, I like to ask dumb questions. Asking dumb questions has served me well in my career, and in this case I have two simple questions about the law:

First: why do we call Metcalfe’s law a law? Has it been proven to be true?

Second: what do we mean, exactly, by value?

Now, it turns out Bob Metcalfe did his homework. In 2013, he published a paper (“Metcalfe’s Law after 40 Years of Ethernet”) testing his law in response to critics who had called it “a gross overestimation of the network effect”. Metcalfe found that his theory (remember: not a law) held up.

So, how did Metcalfe define “value” in his paper?

Metcalfe used Facebook’s revenue as a definition of value and found that it fit his law.

Revenue is, of course, just one way of measuring value. One could drive a truck through the gap between revenue and everything else that individuals, groups or whole societies might choose to value.

For example: does revenue reflect the potential or actual value of harm done in allowing (intentionally or inadvertently) foreign interference in a democratic nation state’s elections? The week I presented this talk, representatives of Facebook and Twitter were testifying to the U.S. Senate Judiciary Subcommittee on Crime and Terrorism in Washington, D.C.

Does revenue also reflect the value of harm done to society by allowing advertising that breaks housing discrimination laws, and by signalling, a year after being notified, that allowing it is acceptable?

It doesn’t.

So maybe revenue isn’t the only mechanism through which we can measure value.

Maybe there are a whole bunch of other things that networks do that we want to pay attention to that might be important or, I guess, valuable.

Maybe networks don’t automatically, positively, affect those values.

Being more deliberate about what we choose to value as a society, and about whether some measures (e.g. revenue) can sufficiently proxy for others, is part of a current, wider discussion. For example: should the economic value of breast milk be included when calculating gross domestic product?

Over the last couple of hundred years, our dominant cultures have done a terrible job of considering negative externalities: the things that we don’t see or choose not to measure when we think about how systems work. One could argue that the now-dominant model of global, networked capitalism incentivizes only certain methods of valuation.

Climate change is one of the more recent examples, and it may already be too late for us to deal with it effectively.

Perhaps it’s time for us to retire the belief (because that’s what it is, just a belief) that networks with large numbers of users are, in and of themselves, an unqualified good.

Writer’s note: John Perry Barlow passed away aged 70 on 7 February 2018. Without a doubt, Barlow has been incredibly influential in shaping the culture and thinking of the networked world. And while there’s great recognition of what he got right, he had blind spots, too.

My second example brings us back to John Perry Barlow and his 1996 manifesto, A Declaration of the Independence of Cyberspace.

In his manifesto, Barlow (excitedly and optimistically) proclaimed that cyberspace presented an opportunity for new social contracts to be negotiated by individuals that would identify and address wrongs and conflicts.

In 2016, The Economist asked Barlow for reflections on his manifesto with the benefit of twenty years of hindsight.

First, he says that he would’ve been a bit more humble about the “Citizens of Cyberspace” creating social contracts to deal with bad behavior online. In the early, heady rush of the young internet, when an accident of starting conditions made it easy to find certain like-minded people, it was tempting to think that “smart” people would build a new, equitable society.

We know now in retrospect that new social contracts weren’t created to effectively deal with bad behavior. Some people may have been warning that this wasn’t particularly likely for quite a while.

While I admit that I’m cherry-picking Barlow’s quotes here, there’s one in particular that I want to highlight:

When Barlow said “the fact remains that there is not much one can do about bad behavior online except to take faith that the vast majority of what goes on there is not bad behavior,” his position was that we should accept the current state of affairs because there is literally no room for improvement.

At this point in delivering my talk, I broke out a swear word between “citation” and “needed” because, to be completely candid, I was angry.

Saying “there isn’t anything better we can do” isn’t how society works.

It isn’t how civilization works.

It isn’t how people work together and protect those amongst us who cannot protect themselves.

It is a little bit like saying on the one hand that the condition underlying human existence is nasty, brutish and short, and on the other, writing off any progress humanity has made to make our lives less nasty, kinder and longer.

In my opinion, given his standing and influence, Barlow’s views on online behavior were irresponsible.

Saying “we can do nothing” is what America says in response to the latest mass shooting when every other civilized country is able to regulate the responsible ownership of firearms.

Saying “we can do nothing” is like saying it’s not worth having laws or standards because we can’t achieve perfection.

We would do better to be clear: is it true that we can do nothing? Or is it true that we choose to do nothing? (In a way, this highlights for me the bait-and-switch in the belief that machine learning or AI will solve fundamental problems for us. Ultimately, these choices are ours to make.)

Barlow’s opinion needs to be seen in context, and it helpfully leads me to my final points. Not because we’re technologists, but because we’re people, we’re responsible to society for the tools we make.

I suggest that, for a number of reasons (one of them being that technology wasn’t yet pervasive in society), technologists in general, and recently the strain of technology culture centered on the West Coast of the United States in particular, have operated on the general idea that we’re apart from society.

That, like scientists, we discover and invent new things and then it’s up to “them” — society, government, and so on — to figure out and deal with the implications and results.

This argument isn’t entirely wrong: it is the job of society and our governments to “figure out” and “deal with” the implications and results of technology. But it’s disingenuous of the technological class (and, to be more accurate, its flag-wavers and leaders) to sidestep participation and responsibility.

In other words: nobody, not even technologists, is apart from society.

Society is all of us, and we all have a responsibility to it.

Getting from here to there

This is all very well and good. But what can we do? And, more precisely, which “we”? There’s increasing acceptance that the world we live in is intersectional and that we all play different, simultaneous roles in our lives. The “we” of society includes technologists who have a chance of shaping products and services; it includes customers and users; it includes residents and citizens.

I’ve made this case above, but I feel it’s important enough to make again: at a high level, I believe that we need to:

- Clearly decide what kind of society we want; and then

- Design and deliver the technologies that bring us ever closer to that desired society.

This work is hard and, arguably, will never be completed. It necessarily involves compromise. Attitudes, beliefs and what’s considered just all change over time.

Those are two high-level goals, but what can people do right now? What can we do tactically?

What we can do now

I have two questions that I think can be helpful in guiding our present actions, in whatever capacity we might find ourselves.

For all of us: What would it look like, and how might our societies be different, if technology were better aligned to society’s interests?

At the most general level, we are all members of a society, embedded in existing governing structures. It certainly feels as though, in the recent past, those governing structures have come under increasing strain, and part of the blame is being laid at the feet of technology.

One of the most important things we can do collectively is to produce clarity and prioritization where we can. Only by being clearer and more intentional about the kind of society we want, and by accepting what that means, can our societies and their institutions provide guidance and leadership to technology.

These are questions that cannot and should not be left to technologists alone. Advances in technology mean that encryption is a societal issue. Content moderation and censorship are societal issues. Ultimately, it should be for governments (of the people, by the people) to set expectations and standards at the societal level, not organizations accountable only to a board of directors and shareholders.

But to do this, our governing institutions will need to evolve and improve. Right now, it is easier and faster for platforms to react to changing social mores. For example, platforms are responding to society’s reaction to “AI-generated fake porn” faster than governing and enforcing institutions are.

Prioritizations may necessarily involve compromise, too: the world is not so simple, and we are not so lucky, that it can be easily and always divided into A or B, or good or not-good.

Some of my perspective in this area reflects the schism American politics is currently experiencing. In a very real way, America, my adopted country, is having to grapple with revisiting the idea of what America is for. The same is happening in my country of birth with the decision to leave the European Union.

These are fundamental issues. Technologists, as members of society, have a point of view on them. But just as post-Enlightenment governing institutions were set up to protect against the asymmetric distribution of power, technology leaders must recognize that their platforms are now an undeniable, powerful influence on society.

As a society, we must do the work to have a point of view. What does responsible technology look like?

For technologists: How can we be humane and advance the goals of our society?

As technologists, we can get excited about reinventing approaches from first principles. We must resist that impulse here, because there are things we can do now, and lessons we can learn now from other professions, industries and fields, to apply to our own. For example:

- We are better and stronger together than apart. If you’re a technologist, consider this question: what are the pros and cons of unionizing? And as products of linked networks ourselves, consider: what is gained, and who gains, from preventing humans from linking up in this way?

- Just as we create design patterns that represent best practices, there are also patterns that are undesirable from our society’s point of view, known as dark patterns. We should familiarise ourselves with them and each work to understand why and when they’re used, and why their use is contrary to the ideals of our society.

- We can do a better job of advocating for, and doing, research to better understand the problems we seek to solve, the context in which those problems exist and their impact. Only through disciplines like research can we catch the negative externalities and unintended consequences we may genuinely have missed, and catch them in the design phase rather than in production, where our work can affect millions.

- We must compassionately accept the reality that our work has real effects, good and bad. We can wish that bad outcomes don’t happen, but bad outcomes will always happen because life is unpredictable. The question is what we do when bad things happen, and whether and how we take responsibility for those results. For example, Twitter’s leadership must make clear what behaviour it considers acceptable, and do the work to be clear and consistent without dodging the issue.

- In America especially, technologists must face the issue of free speech head-on without avoiding its necessary implications. I suggest that one of the problems culturally American technology companies (i.e., companies that seek to emulate American culture) face can be explained in software terms. To use agile user story terminology, the problem may be due to focusing on a specific requirement (“free speech”) rather than the full user story (“As a user, I need freedom of speech, so that I and those who come after me can pursue life, liberty and happiness”). Free speech is a means to an end, not an end in itself, and accepting that free speech is a means involves the hard work of considering, and taking a clear, understandable position on, which ends it serves.

- We have been warned. Academics (in particular sociologists, philosophers, historians, psychologists and anthropologists) have been warning of issues such as large-scale societal effects for years. Those warnings have, bluntly, been ignored. In the worst cases, those same academics have been accused of not helping to solve the problem. Moving on from the past, is there not something that we technologists can learn? My intuition is that since the 2016 American election, middle-class technologists are now afraid. We’re all in this together. Academics are reaching out, and have been reaching out for some time. We have nothing to lose but our own shame.

- Repeat to ourselves: some problems don’t have fully technological solutions. Some problems can’t just be solved by changing infrastructure. Who else might help with a problem? What other approaches might be needed as well?

There’s no one coming. It’s up to us.

My final point is this: no one will tell us or give us permission to do these things. There is no higher organizing power working to put systemic changes in place. There is no top-down way of nudging the arc of technology toward one better aligned with humanity.

It starts with all of us.

Afterword

I’ve been working on the bigger themes behind this talk since …, and an invitation to 2017’s Foo Camp was a good opportunity to try to clarify and improve my thinking so that it could fit into a five-minute lightning talk. It also helped that Foo Camp has the kind of influential audience (small and hand-picked, again for good and ill) that would be a good litmus test for the quality of my argument, and would be instrumental in taking on and spreading the ideas.

In the end, though, I nearly didn’t do this talk at all.

Around 6:15pm on Saturday night, just over an hour before the lightning talks were due to start, after the unconference’s sessions had finished and just before dinner, I burst into tears talking to a friend.

While I won’t break the societal convention of confidentiality that helps an event like Foo Camp be productive, I’ll share this: the world felt too broken.

Specifically, the world felt broken like this: I had the benefit of growing up as a middle-class, educated individual (albeit not white) who believed he could trust that institutions a) were capable and b) would do the right thing. I now live in a country where a) the capability of those institutions has consistently eroded over time, and b) to add insult to injury, those institutions are now being systematically dismantled.

In other words, I was left with the feeling that there’s nothing left but ourselves.

Do you want the poisonous lead removed from your water supply? Your best bet is to try to do it yourself.

Do you want a better school for your children? Your best bet is to start it.

Do you want a policing policy that genuinely rehabilitates rather than punishes? Your best bet is to…

And it’s just. Too. Much.

Over the course of the next few days, I managed to turn my outlook around.

The answer, of course, is that it is too much for one person.

But it isn’t too much for all of us.

Notes

Ideas don’t come into being on their own. Here are some of the people who have influenced me and some of the reading that I’ve built upon when putting together my thoughts.

Some people

If you’re interested in following people who produce some of the raw material that I used to put together this talk, you should follow:

- Ingrid Burrington

- Maciej Cegłowski, Tech Solidarity

- Tim Carmody

- Rachel Coldicutt

- Debbie Chachra

- Mar Hicks

- Stacy-Marie Ishmael

- Sarah Jeong

- Alexis Madrigal

- Eric Meyer

- Tim Maughan

- Safiya Noble

- Mimi Onuoha

- Jay Owens

- Fred Scharmen

- Jay Springett, Stacktivism

- Zeynep Tufekci

- Sara Wachter-Boettcher

- Georgina Voss

- Damien Williams

- Rick Webb

Some reading

- Something is wrong on the internet, James Bridle

- Maciej Cegłowski, talks

- How do you solve a problem like technology? A systems approach to regulation, Rachel Coldicutt

- The tech industry needs a moral compass, Rachel Coldicutt

- Radical Technologies, Adam Greenfield

- What Facebook Did to American Democracy, Alexis Madrigal

- The year we wanted the internet to be smaller, Kaitlyn Tiffany

- Don’t Be Evil: Fred Turner on Utopias, Frontiers, and Brogrammers, Fred Turner, Logic Magazine

- Technically Wrong, Sara Wachter-Boettcher

- My Internet Mea Culpa: I’m Sorry I Was Wrong, We All Were*, Rick Webb

With thanks to

- Farrah Bostic

- Debbie Chachra

- Tom Carden

- Heather Champ

- Dana Chisnell

- Tom Coates

- Blaine Cook

- Rachel Coldicutt

- Clearleft and the Juvet A.I. Retreat attendees

- Warren Ellis

- Cyd Harrell

- Dan Hill

- Jen Pahlka

- Matt Jones

- Derek Powazek

- Robin Ray

- Nora Ryan

- Matt Webb