Writing with AI

When ChatGPT came out one year ago, we wanted to know whether and how it could be used for writing. We put it to the test and came up with a careful answer.

This article is part two of a series about the history, reasoning, and design of iA Writer 7, our cautious response to AI. In this article, we answer the following five questions:

- How good is AI for writing?

- When is AI useful for writing, when is it not?

- When is it right and when is it wrong to use AI?

- What is the problem?

- What should we design?

You've heard it all: AI will save the world. AI will destroy the world. AI will become conscious. AI is dumb. AI is genius. AI is starting. AI is over. Some hope that AI will become a robot God, others predict its entropic death. AI is exhausting. If you work in tech, you can't ignore it. Unfortunately, not every hype is just hype.1 So what's going on? How good is AI for writing, really?

1. How good is AI for writing?

It’s not that good

It is plausible that AI will improve, but no one knows what the future brings. Right now, if your expectations go beyond getting writing done one way or another, writing with ChatGPT is not that good.

Observing and experimenting with AI, we were not the only ones to find both pleasure and pain, benefits and downsides, in AI-driven writing.2 Some of the dangers, like hallucinations and false logic, are observable and easily reproducible. Others, like the loss of voice and of common trust in writing, are long-term issues, and some plausible traps are still speculative. Currently, AI is not that good in terms of:

- Quality: By itself, it makes a lot of mistakes and thus needs careful supervision to stay accurate and meaningful

- Voice: Letting a machine speak in your place makes you sound and feel like a tool

- Satisfaction: Having a robot ghostwrite for you makes you learn, grow, and achieve as much as winning at chess by letting a computer make your moves

And it’s not that good morally either: Why should someone bother to read what you didn’t bother to write?

It’s not that bad either

AI can write code, articles, books, and emails, and it can do math. Sometimes it's accurate, sometimes it makes things up. It always needs supervision. Left to its own devices, it can make the most ridiculous mistakes and make you look like a complete fool.

It's both shockingly bad and shockingly good at explaining difficult matters. ChatGPT can explain Kant and the theory of relativity, and it can translate cuneiform documents at a frightening speed. Mind you: you cannot trust it. It has no conscience whatsoever. It does not understand what it does, and it does not know what is good or bad.

But if you get stuck reading Aristotle's Metaphysics in ancient Greek, it can clear things up. And even (well, especially) when it's wrong, it can give you a new perspective. It's a great learning and dialogue partner.3 In spite of all its downsides, AI is cheap, fast, and painless.

Killer quality: convenience

Despite the incessant hype, fear, and hysteria, using AI for writing has one killer quality: convenience. It writes faster, more clearly, and with fewer typos than most high school students. It's comparable to early chess computers, which quickly beat average players but took some time to reach grandmaster level. When you look at how technology has evolved over the last 20 years, you know that convenience is the key factor of success.4

And due to its convenience, we believe that using AI for writing will likely become as common as using dishwashers, spellcheckers, and pocket calculators. The question is: How will it be used? Like spell checkers, dishwashers, chess computers and pocket calculators, writing with AI will be tied to varying rules in different settings.5

In order to cope with this new reality for writing we needed to observe and think through the new writing process. We needed to test it up and down, left and right, back and forth, copy and paste, recopy and paste back, through and through.

2. When is AI useful for writing, when is it not?

Not useful: Think less

AI turns thoughtful tasks into thoughtless ones. Not thinking is a recipe for disaster. Regardless of how AI evolves, letting AI write in our place is a classic sci-fi catastrophe.

Thinking less where thinking is key is not advisable, unless you delegate the thinking to someone you trust to think better than you. And yet, in our initial assessment, we missed a key benefit. The true benefit of writing with AI only revealed itself through intense usage.

Writing is not about getting letters on a page. It's not about getting done with a text. It's about finding a clear and simple expression for what we feel, mean, and want to say. Writing is thinking with letters. Usually we do this alone. With AI, you write in dialogue. It comes with a chat interface, after all. So, don't just write commands, talk to it.

Useful: Think more

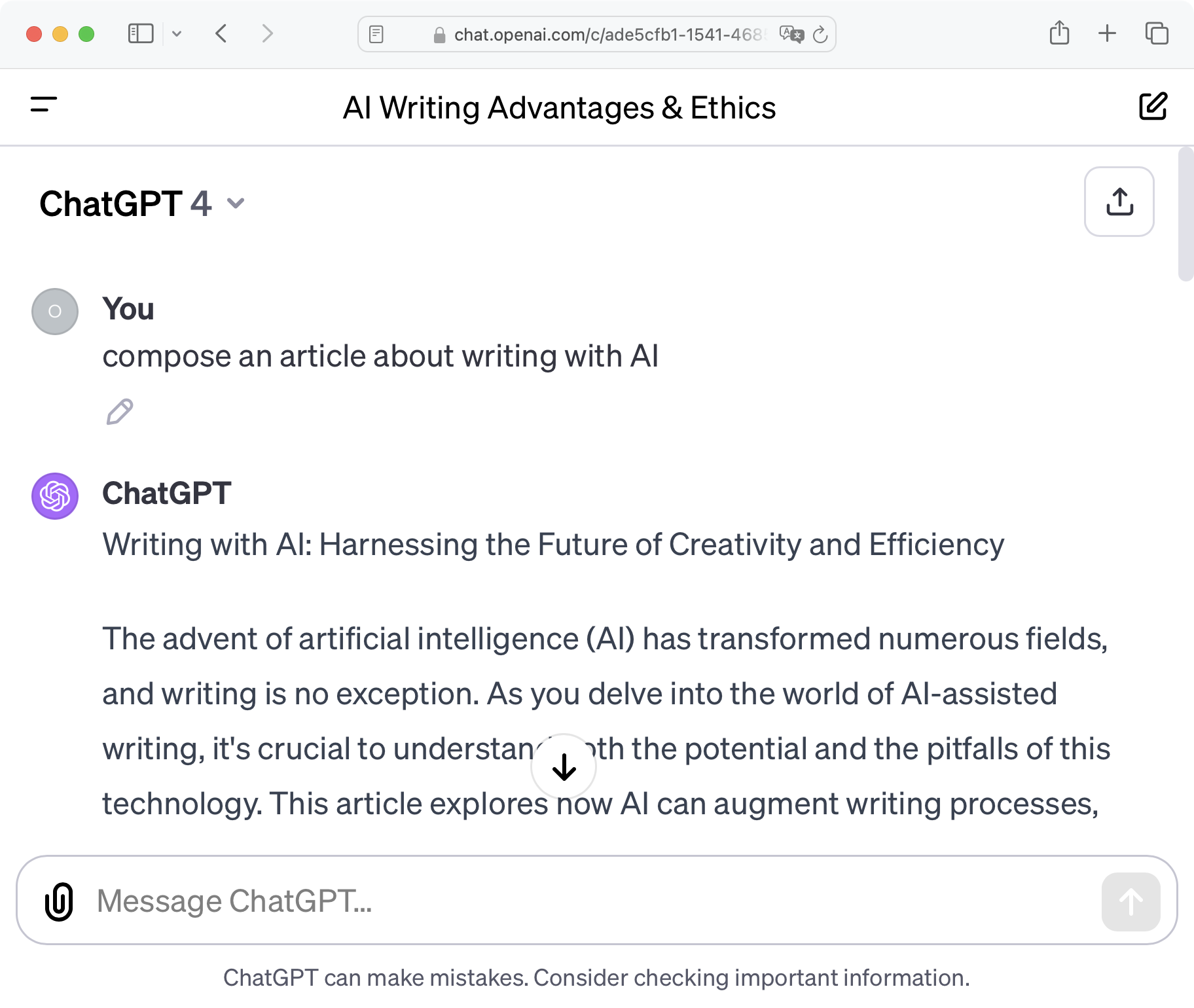

Writing in dialogue with an artificial companion was unexpected, inspiring, and exciting: a truly new experience. Using AI as a dialogue partner during preparation, when stuck, and during editing proved surprisingly useful. And that thing is patient like no one else! It never gets tired of you.

Thinking in dialogue is easier and more entertaining than struggling with feelings, letters, grammar, and style all by ourselves. Used as a writing dialogue partner, ChatGPT can become a catalyst for clarifying what we want to say, even when it is wrong.6 Sometimes we need to hear what's wrong to understand what's right.

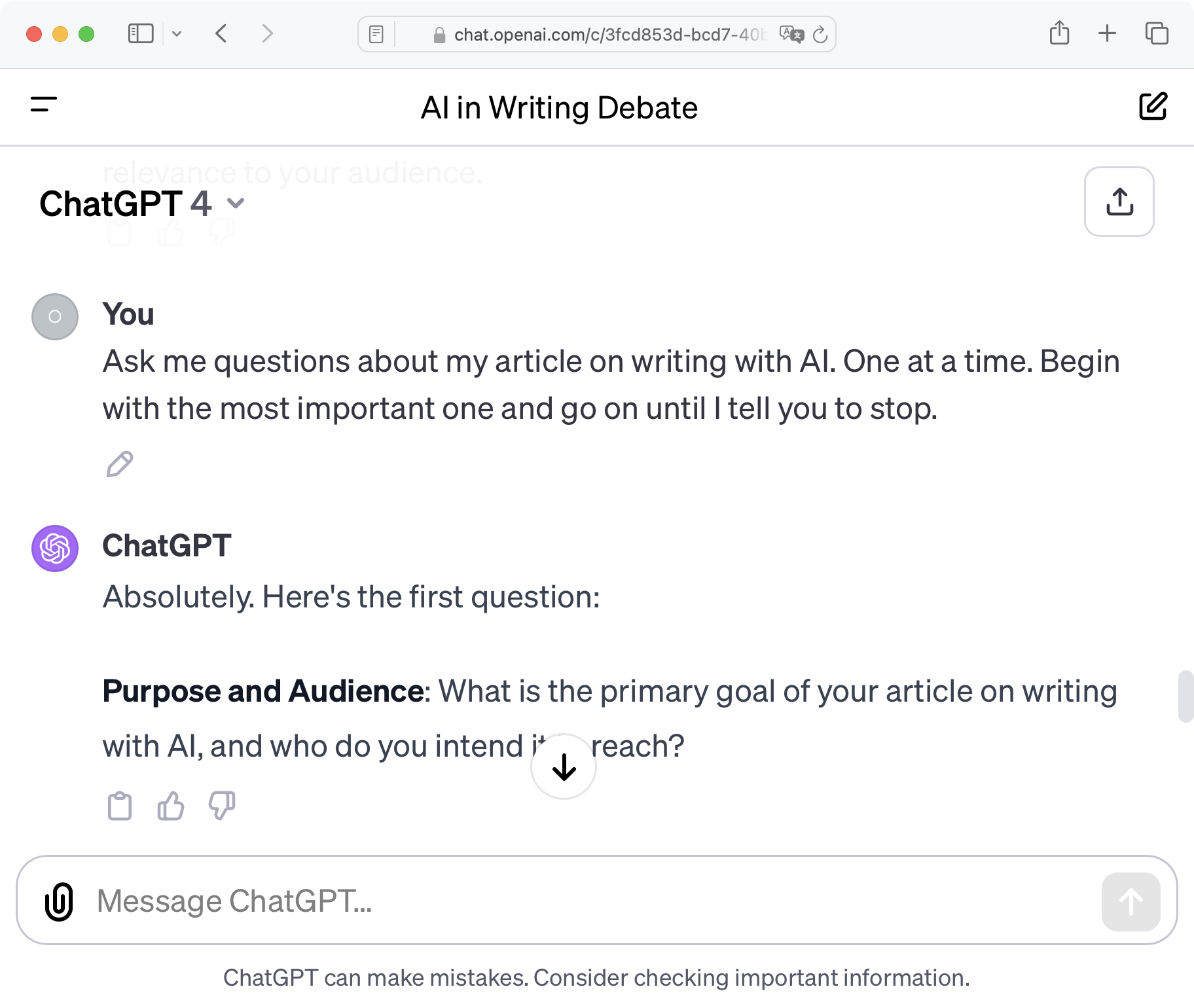

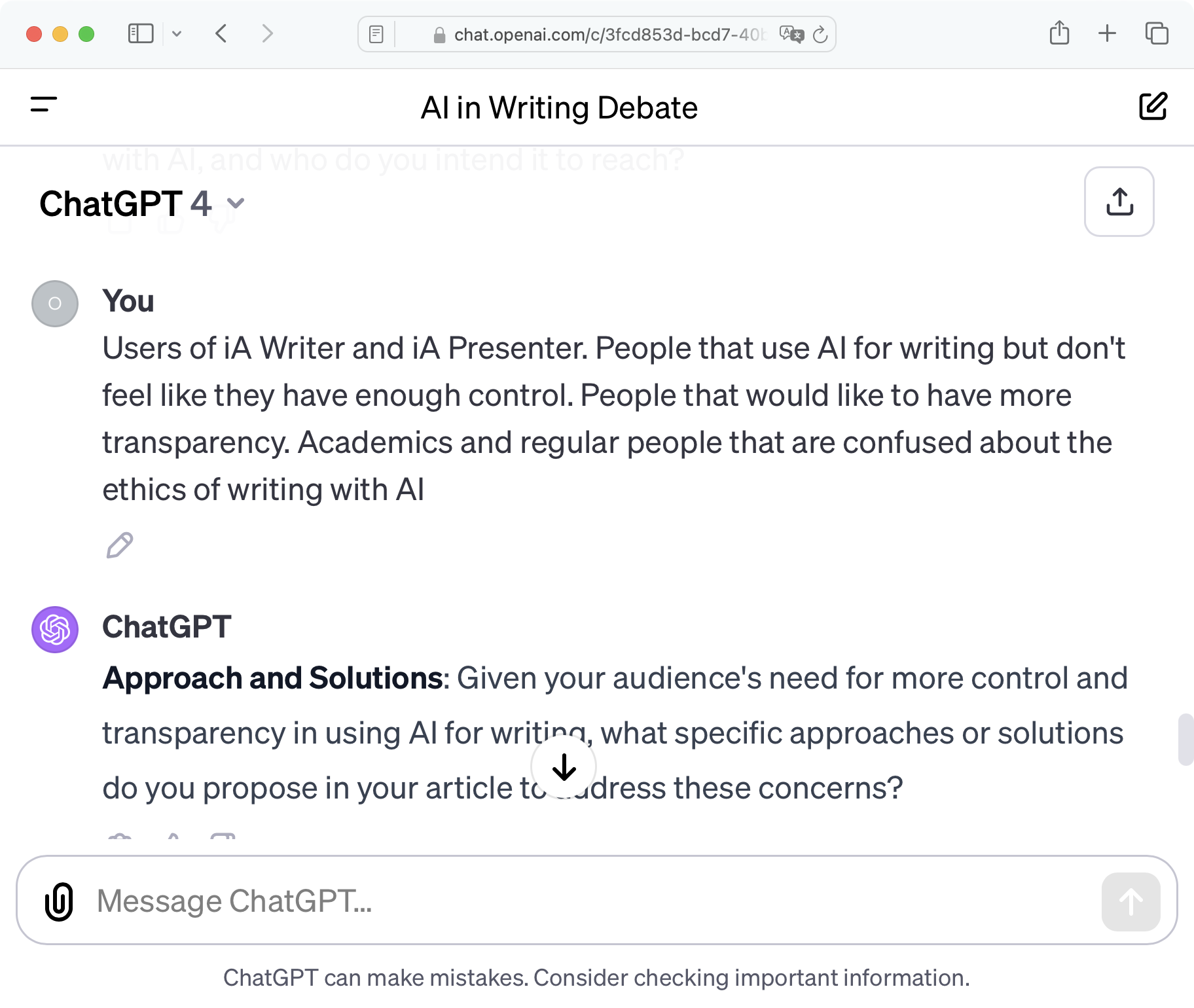

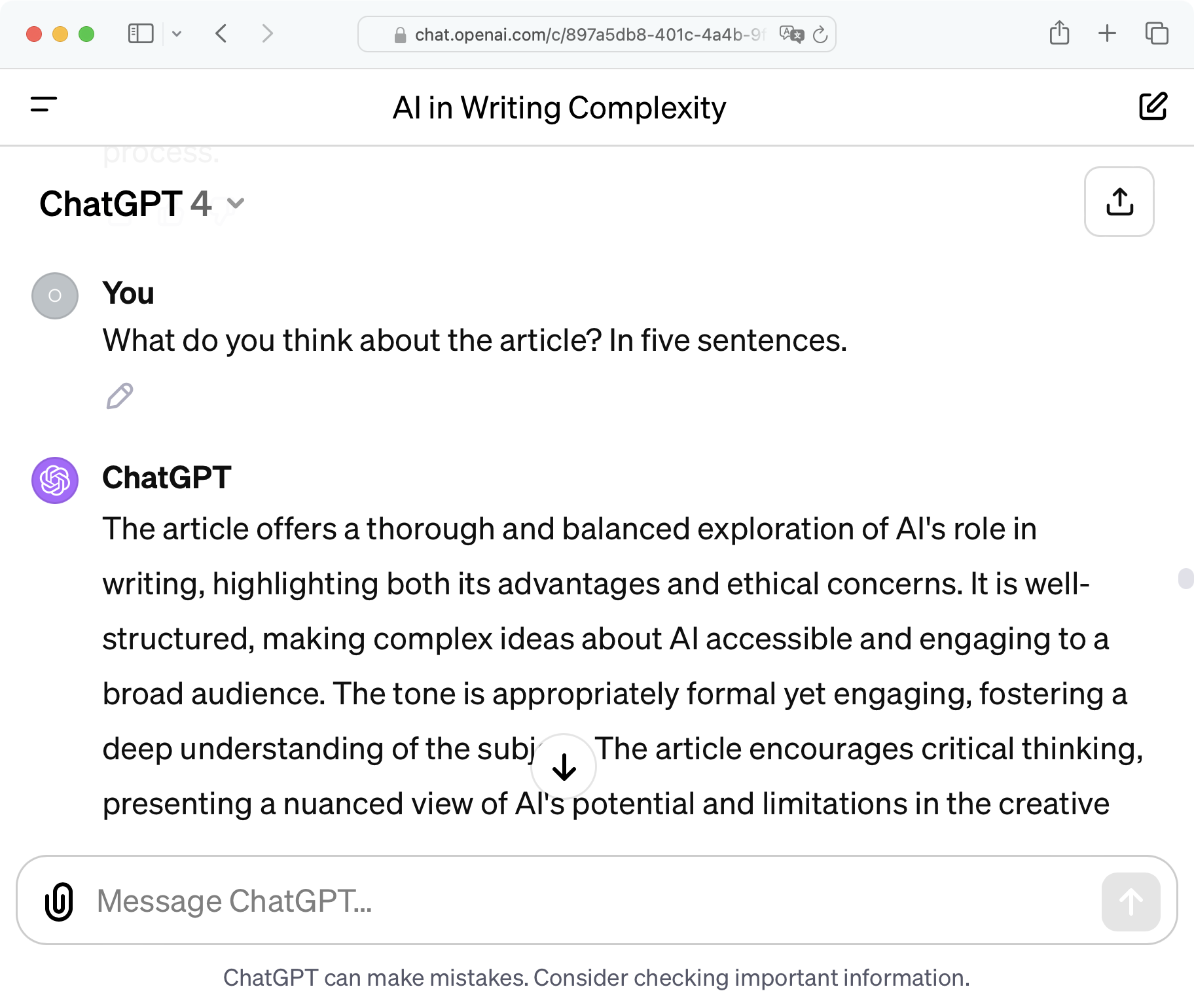

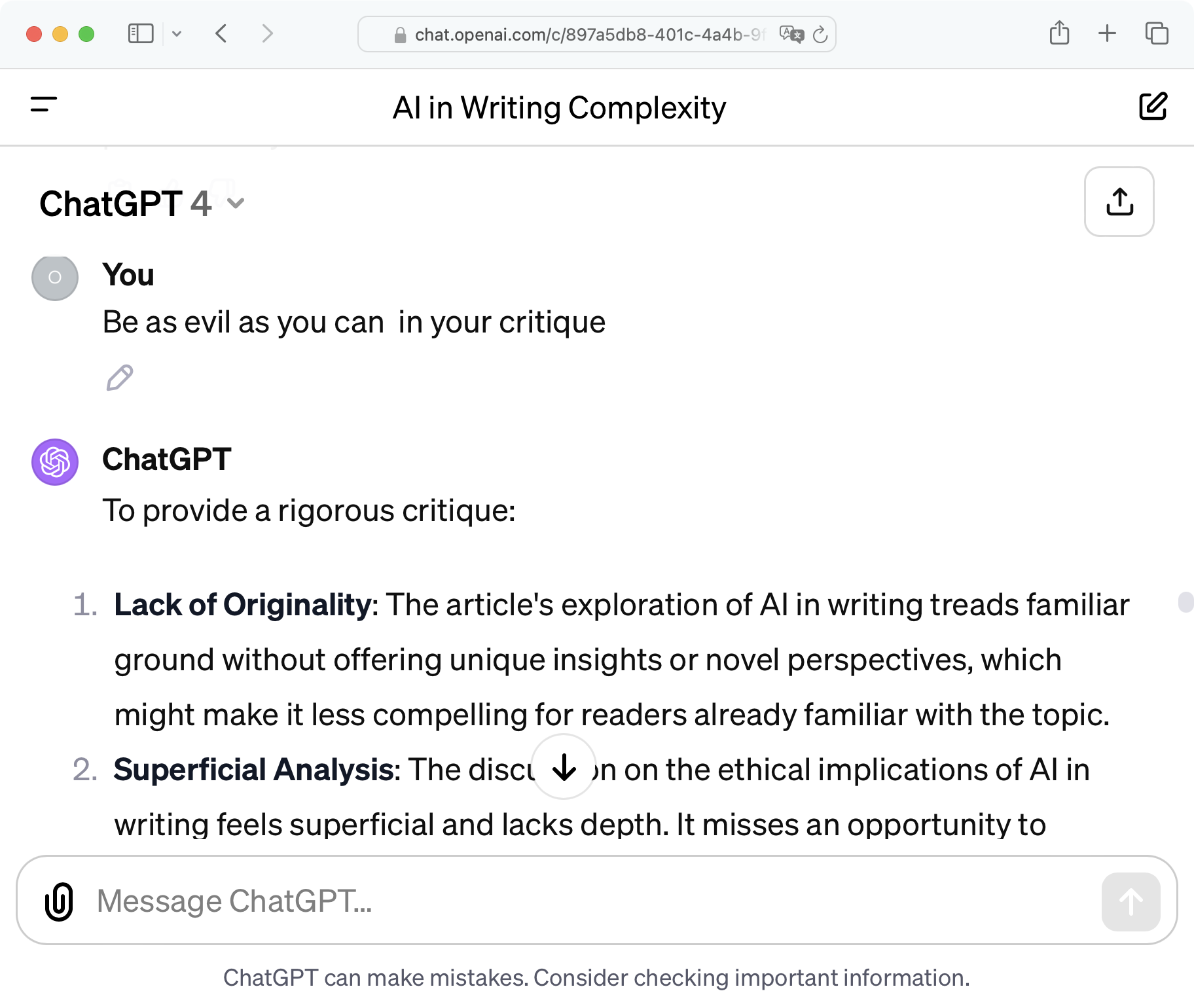

AI often makes a lot of factual and logical mistakes. Mistakes, if identified, can help you think. Seeing in clear text what is wrong or, at least, what we don’t mean can help us set our minds straight about what we really mean. If you get stuck, you can also simply let it ask you questions. If you don’t know how to improve, you can tell it to be evil in its critique of your writing:

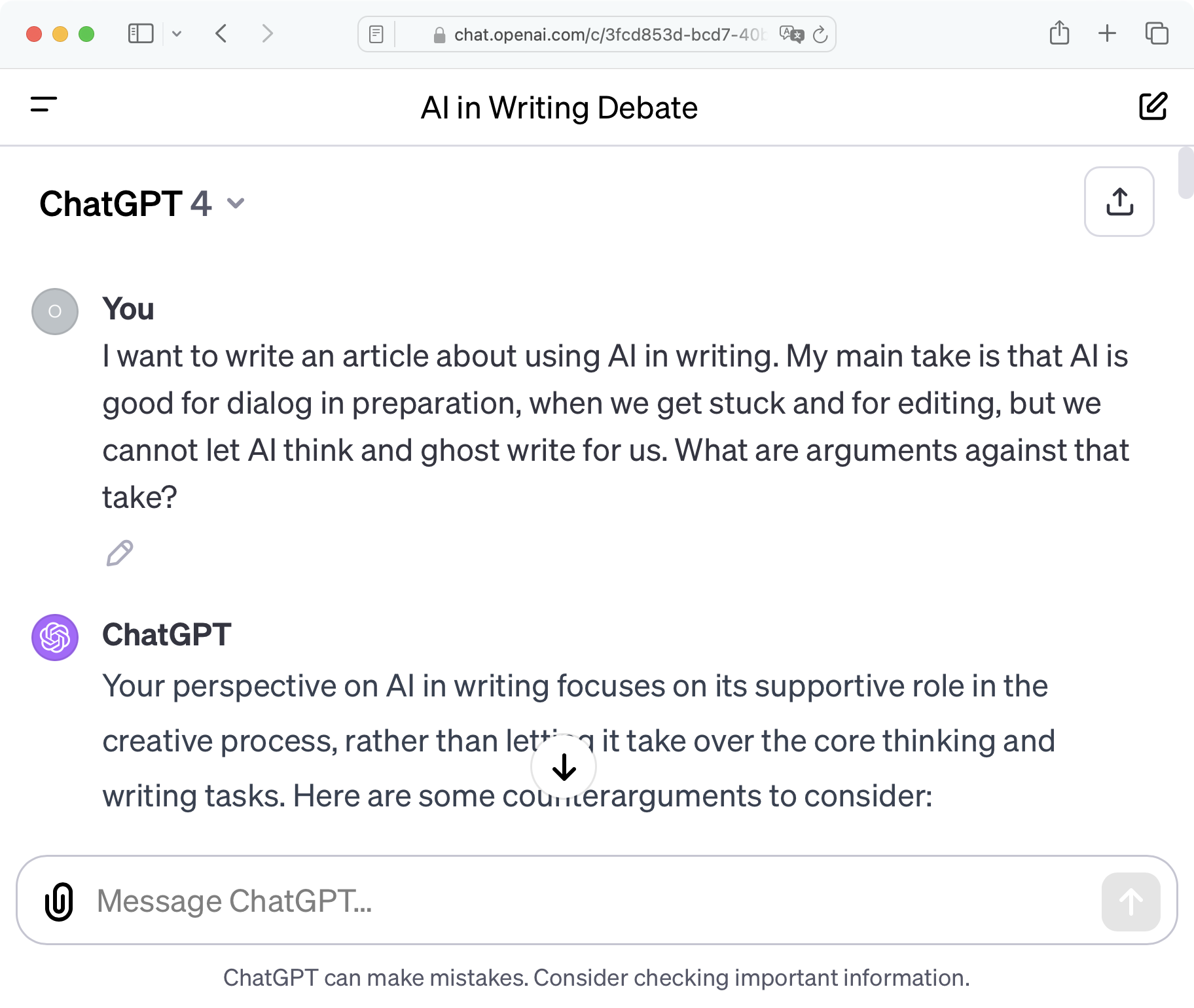

AI can and will ruin your voice and credibility if you lazily let it write in your place. As writers, we cannot allow AI to replace our own thinking. We should use it to simulate the thinking of a missing dialogue partner. To write better, we need to think more, not less.

3. When is it right and when is it wrong to use AI?

When it comes to morals, everyone sees things a bit differently. But then again, most people agree on the very basics of good and bad. Most people around the globe will agree that stealing, lying, and killing are wrong. Is there anything that corresponds to stealing, lying, and killing in writing?

It’s a spectrum

Let’s look at some examples. Ask yourself how you judge someone who uses AI on the following spectrum, as:

- A discussion partner to prepare writing

- A spellchecker or grammar checker to fix technical errors

- A fact checker to get rid of overlooked factual errors

- A friend that helps out when you can’t find the right word that is on the tip of your tongue

- A partial ghostwriter

- A full-on ghostwriter, but then you check what was written

- A full-on ghostwriter without checking what was written

- A full-on ghost reader and ghostwriter without checks and balances

It's easy to judge the very top and the very bottom of the list. In the middle, different people will likely have different ethical standards. Some will even hold themselves and others to different standards and have no problem with that. In other words, at the extremes it's clear; in the middle, it's up to us. Still, there are limits to what is right and wrong.

Extremes and grey zone

It's not that hard to identify which usage is generally sound and which is not. Just compare using AI with how we dealt with similar issues before AI.

- Discussing our writing with others is a general practice and regarded as universally helpful; honest writers honor and credit their discussion partners

- We already use spell checkers and grammar tools

- It’s common practice to use human editors for substantial or minor copy editing of our public writing

- Clearly, using dictionaries and thesauri to find the right expression is not a crime

Letting someone else write part of our work is where it gets complicated. Look at how quoting works in academic writing: using even a single expression you did not come up with yourself without quoting it is unacceptable. Even restating a thought you owe to another person without crediting them is not allowed. Outside of academic writing, it's up to us as writers to decide how much artificial text we are comfortable using and how transparent we want to be about it.

4. What is the problem?

Entropy: Quoting quoted quotes

You don't need to be an academic to see that we shouldn't pretend to know what we don't understand, say what we don't mean, or express what we don't feel. The growing entropy of AI feeding on itself already creates not only issues of social trust and conscience but also technical issues. How about trying to address the entropy instead of increasing it further?

Artificial text is a statistical mashup of human quotes. When we quote AI, we quote quotes. We quote a Bircher muesli of quotes, write over it, and then feed it back into the AI system. There our input gets rehashed again. The way it currently works, AI is more likely to reach lukewarm entropy than ice-cold super-intelligence.

Using AI in the editor replaces thinking. Using AI in dialogue increases thinking. Now, how can we connect the editor and the chat window without making a mess? Is there a way to keep human and artificial text apart?

The key problem: Using AI text is patchwork

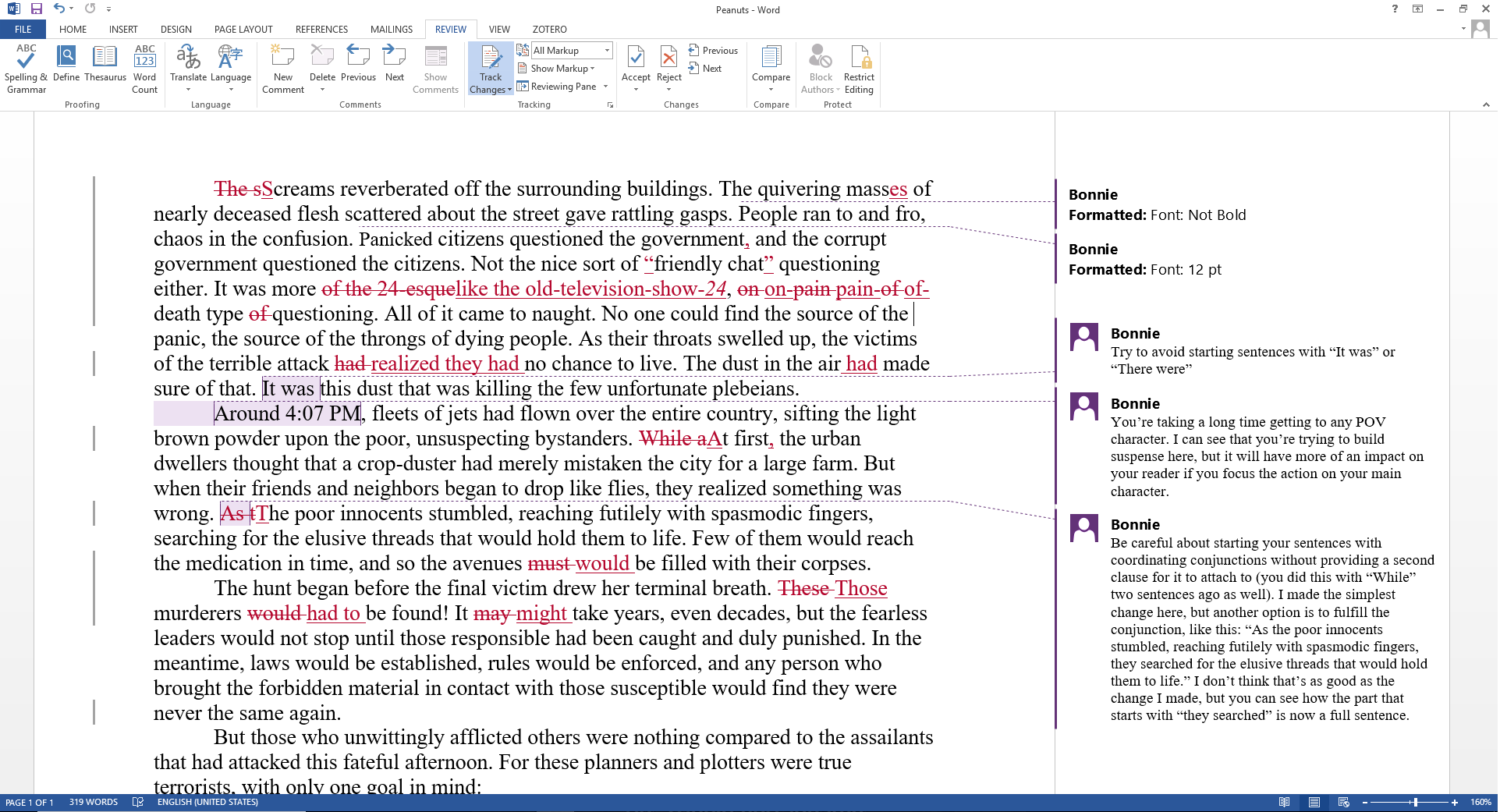

If you do use ChatGPT, you will inevitably blend AI-generated text with your own, blurring the lines of authorship. Here is what happens if you use ChatGPT inside a text editor:

- Write a prompt

- Edit the prompt

- Try to remember what you wrote yourself

Of course: you won't remember. Again, simply avoiding AI integration doesn't address the problem at all. People will use ChatGPT together with our apps. They will paste robot text, edit it, and forget what is theirs and what is borrowed.7

5. What should we design?

Trust and Authorship

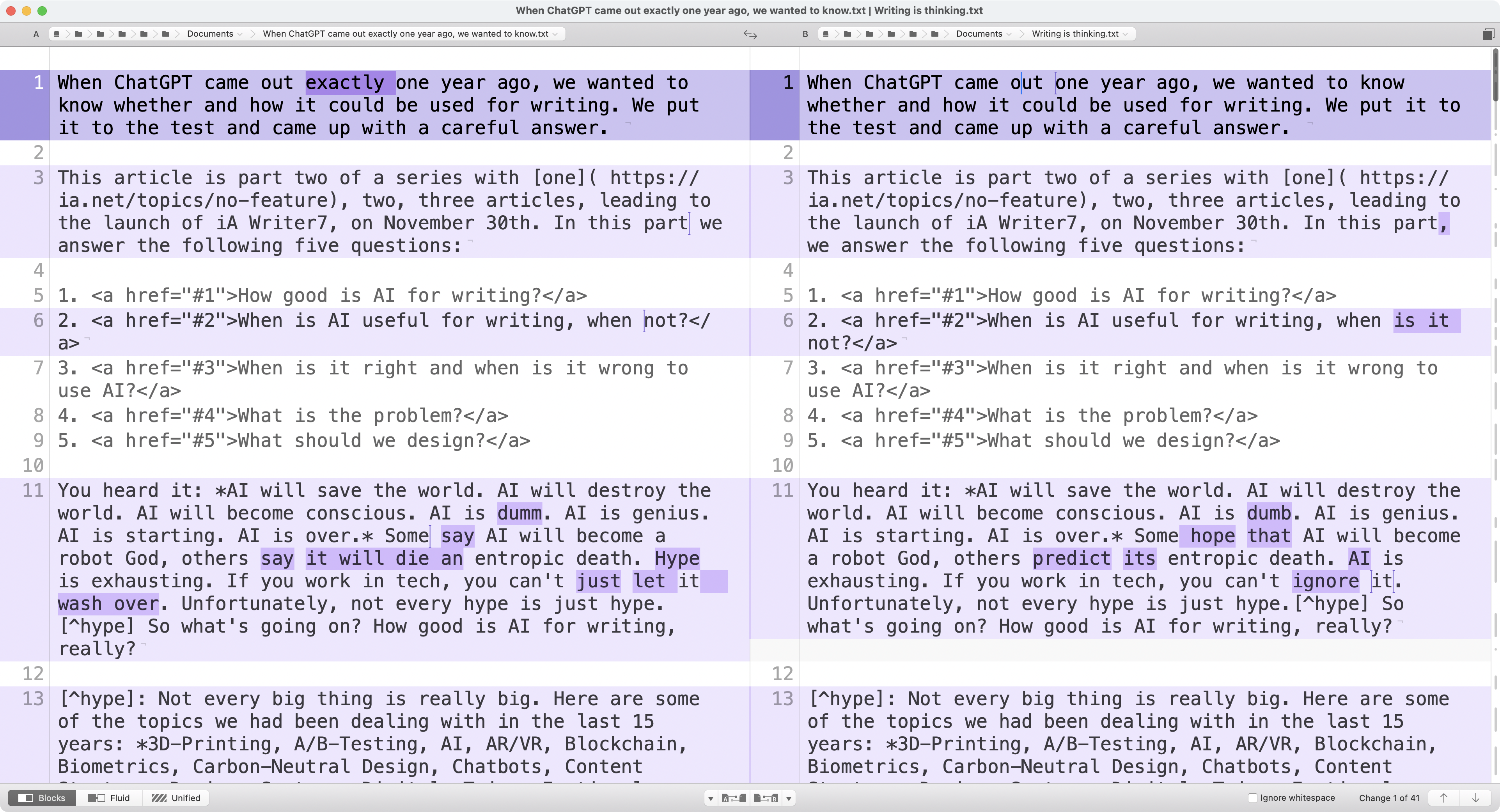

Honesty with others begins with honesty with ourselves: recognizing what is ours and what is borrowed. This starts in the writing process. We need to distinguish the human voice from robot text. Current text editors don't make it easy to keep track of what is yours and what isn't, unless you compare documents or risk high blood pressure with MS Word's Track Changes.

Telling humans and machines apart can't and shouldn't be delegated to a machine. It can't be delegated to anyone other than the writer. It's up to you, the writer, to be transparent about what is yours and what is not. Only you really know how much you used AI to write. Honesty is up to you. Making it easier for you to tell the difference is up to us.

Keep it simple

In a time of big claims and exaggerated promises, we have to keep things simple. And that gets very hairy very quickly. We want to offer a straightforward solution for people who want to use AI and at the same time stay in control of what they write.

- How do we show the difference between artificial and human text without making a mess?

- How do we save it in plain text without adding tons of markup?

- How do we make human and artificial text discernible as we write without adding a lot of methodical, technical and graphical complexity?

At first we thought that we should simply quote AI like we quote other authors. Maybe we could use single straight quotes for AI text, or something like that. But we soon found out that markup doesn’t work at all. Quoting AI is not the same as quoting people. When we use artificial text, we don’t necessarily quote entire sentences or paragraphs. What happens is nitty-gritty patchwork. And ideally, in the end we edit all AI away. How should that work? This is a riddle we had to solve in design and code. Stay tuned to see our solution.
